Updated on February 11, 2025

Large language models (LLMs) and the chatbots built on them, such as ChatGPT (OpenAI), Bard (Google), Claude (Anthropic), and LLaMA (Meta), have seen a significant rise in usage across industries. Anyone can ask these chatbots to write code for a mobile app or summarise a 1,000-page book in seconds. Healthcare, however, is a very complex industry. Healthcare providers are still evaluating new technologies such as healthcare chatbots before rolling them out to the public, because an incorrect or unsupervised response could harm a patient.

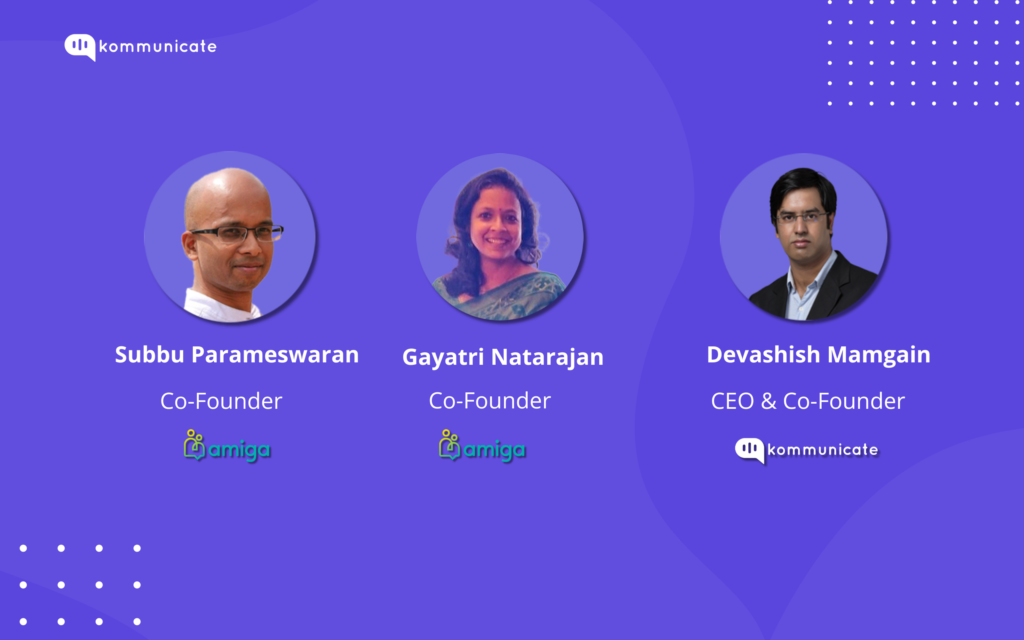

Kommunicate invited mental health advocates Subbu Parameswaran and Gayatri Natarajan, co-founders of Amiga, to share their perspectives on the growing use of generative AI and large language models (LLMs) in healthcare. Amiga is a conversational AI-powered mobile app that helps parents better understand their children’s behaviour. Kommunicate’s CEO and Co-Founder Devashish Mamgain joined the session to bring a technology expert’s perspective on using conversational AI in healthcare. To watch the entire session, click here.

Here’s a summary of the entire conversation –

What are your thoughts on the state of AI in healthcare today?

Subbu began by stating that conversational AI is going to change humanity for good. The public’s perception of AI changed drastically after November 2022, when OpenAI made ChatGPT available to everyone. People began to experience firsthand the power of artificial intelligence, which they had previously taken for granted.

“Chatbots powered by conversational AI have come a long way since their early days, when they were purely rule-based and used only as FAQ bots. Now, with OpenAI’s large language models, a chatbot not only answers FAQs but can also get our work done faster and more efficiently. If prompted correctly, it can help you build an app, or summarise a long research paper in a form that is easy for everyone to understand,” said Devashish.

For those in the healthcare industry, this evolution has created significant opportunities. AI-powered chatbots and customer service email automation can now assist with scheduling and patient engagement, as well as more complex tasks like symptom assessment and first-level care. For a deeper understanding of how to build and implement such tools, you can refer to this healthcare chatbot guide, which offers a step-by-step approach to creating your own healthcare chatbot solution, including AI-powered email ticketing for efficient customer support.

We asked Gayatri and Subbu how they came up with the idea of using conversational AI to support parents.

“We wanted to make parenting accessible.” Subbu elaborated that they wanted to give parents an app that is available 24/7. He said that mental health is a serious problem, and people are reluctant to talk about it. He also noted that people often mistake mental health for mental disorders.

Adding to this, Gayatri noted that most parents avoid seeking mental health help because they don’t want to give the impression that their child is going through a difficult phase. These parents need privacy and an unbiased, judgement-free partner, which is what Amiga offers: an app that converses with parents much as a therapist would. The difference is that it is available 24/7 and budget-friendly.

Parents across different geographies have unique ways of parenting. How does Amiga fit in there?

While approaches to handling children differ across countries, children’s behaviour stays largely the same. Parents usually come to Amiga because their current approach is not working and they want to try a new way of handling these issues.

Cultural context is essential to solving this problem. We have built the app with the Indian and Asian parenting style in mind. Our plan is to enhance the bot so that it identifies where the user is logging in from and, based on that location, converses in a manner suited to that region.

Where else do you see healthcare businesses using Conversational AI?

Conversational AI helps hospitals and clinics schedule appointments, remind patients to take medication, and deliver reports on messaging platforms. Hospital management teams now collect real-time feedback on the patient journey through channels like WhatsApp and SMS. Pharmaceutical and biotech companies use large language models to summarise research papers into a form that is easier to consume, and clinical staff use AI to get real-time answers on complex cases so they can act immediately.

Take pharmaceutical companies as an example. They use conversational AI in clinical trials to reach out to volunteers through omnichannel platforms. These large pharmaceutical and biotech companies use large language models and AI to match the right volunteers to the right trial.

Subbu worked in pharmaceuticals before starting Amiga. He said that the two critical challenges in a clinical trial are finding volunteers and addressing respondent bias. In addition, by the end of a trial a large volume of data has been collected, and summarising it used to take 3-4 months. With large language models like Claude, ChatGPT, and Bard, these summaries can now be prepared in minutes. AI has significantly sped up the process.

How far should we push AI?

“AI is a tool, and we should treat it as a tool. It should be used to enhance the productivity of workers, not to replace them,” said Gayatri.

Subbu – “Ever since nuclear fission was discovered, we have been able to light up cities for centuries or destroy them within seconds; that is how AI should be seen today. There are ethical issues with these large language models. If the models are tuned to serve the needs of a few specific people, they will definitely harm humanity. It is up to regulators to decide how far we should go. The moment AI enters people’s personal lives and people start taking advantage of it, that is precisely where we should draw the line. There are also accuracy concerns in healthcare. What if the AI gives patients an incorrect diagnosis?”

Devashish was of the view that AI can never replace humans. Instead, AI is going to work alongside human beings, and he envisions a world where the two complement each other. An LLM needs a vast dataset for training, whereas a small child needs only a little information to tell a cat from a dog. The human brain is far more complex and intelligent than many AI systems on the market.

Devashish believes AI has much more to offer in the future. Every technology has its strengths and weaknesses, and none is perfect. In healthcare, accuracy will play a crucial role. The European Union has already introduced regulations on the use of AI, and regulators in every country should step in and create laws on AI usage.

Our session ended with one last question for all three panellists.

How can we bring a balance between AI and humans?

“Finding a balance between humans and AI is tricky. AI systems can perform tasks much faster than humans; a human cannot summarise a research paper in minutes. However, an AI cannot answer a question it has never been trained on. This is where there should be a handoff between the AI and a human. When the query gets personal or ethical, a human should step into the conversation and take over,” said Subbu.

Gayatri - “Humans should act as fact-checkers whenever a conversation happens between an AI and a patient. Reviewing these conversations should be a regular task for humans. The challenge is keeping up with AI’s speed: once humans can keep pace with AI, there will be balance. Today, it is impossible to check every conversation an AI bot has.”

Devashish - “As AI improves and is fed more data, it will make fewer errors. Users should always be able to tell that they are interacting with a machine. We see companies using human faces for AI chatbots, which misleads users. Users should be aware that they are conversing with an AI chatbot and not an actual human. That’s why at Kommunicate, we have built a system that embraces human & AI collaboration for safer and superior experiences.”

To summarise, generative AI and LLMs will surely be incorporated into healthcare businesses. The open question is how they will be regulated. Government bodies will have to work with healthcare, biotech, and pharmaceutical companies to introduce stricter regulations on their use, with these systems continuously reviewed for errors.

Manab leads the Product Marketing efforts at Kommunicate. He is intrigued by developments in AI and envisions a world where AI and humans work together.