Updated on January 19, 2024

Artificial intelligence has been in the news a lot recently, especially since the launch of ChatGPT and Bard. Conversational AI, a subset of artificial intelligence, is also advancing rapidly.

Conversational AI allows for natural conversations between humans and machines. Its integration into healthcare has been transformative, enabling AI-powered email ticketing for patient queries, smoother workflows, and better patient engagement. This evolution shows how principles from customer service email automation are now benefiting critical sectors like healthcare.

Conversational AI can serve as a virtual agent, answering queries, offering medical guidance, and in some cases, helping doctors diagnose a particular ailment.

Conversational AI has made many healthcare processes more efficient. Additionally, integrating healthcare digital signage with Conversational AI, as highlighted in our healthcare chatbot guide, can improve how information is shared within healthcare facilities. Digital signage can display real-time updates, health tips, and personalized messages, further enhancing the patient experience.

However, a few ethical considerations pose challenges to the widespread adoption of Conversational AI in healthcare.

We take a look at these challenges in this blog post, along with the privacy and data security concerns that many people have.

Privacy concerns

Healthcare data is one of the most sensitive types of data there is. In 2022 alone, there were 707 healthcare data breaches in the US that each exposed 500 or more records.

As the threat from cybercriminals looms large, organizations and individuals are increasingly concerned about using Conversational AI in healthcare. Some of these concerns are:

1. Data breach

A data breach occurs when an unauthorized party accesses the private information that patients provide to a healthcare institution, without the institution’s consent. Well-known data breaches in the US include the Tricare breach, which affected about 5 million patients, and the Community Health Systems (CHS) breach, reportedly carried out by hackers based in China.

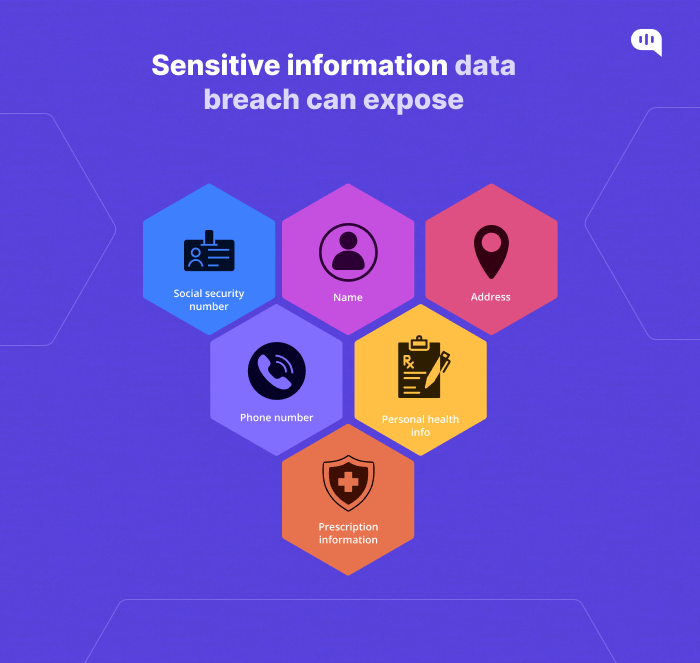

A data breach can expose a great deal of sensitive information to cybercriminals, including details such as:

- Social Security number

- Name

- Address

- Phone number

- Personal health information

- Prescription information

These are just some of the details a cyberattack may expose. Malicious actors can then use this information themselves or sell it on the dark web.

Case in point: the breach at Australian health insurer Medibank, which happened as recently as 2022. When Medibank refused to pay a ransom, a Russia-linked group posted around 5 GB of sensitive patient information on an anonymous internet forum. Medibank lost $1.8 billion in market capitalization as investors came to terms with the seriousness of the breach.

Cases like this underline the importance of having sophisticated measures in place to protect patient information.

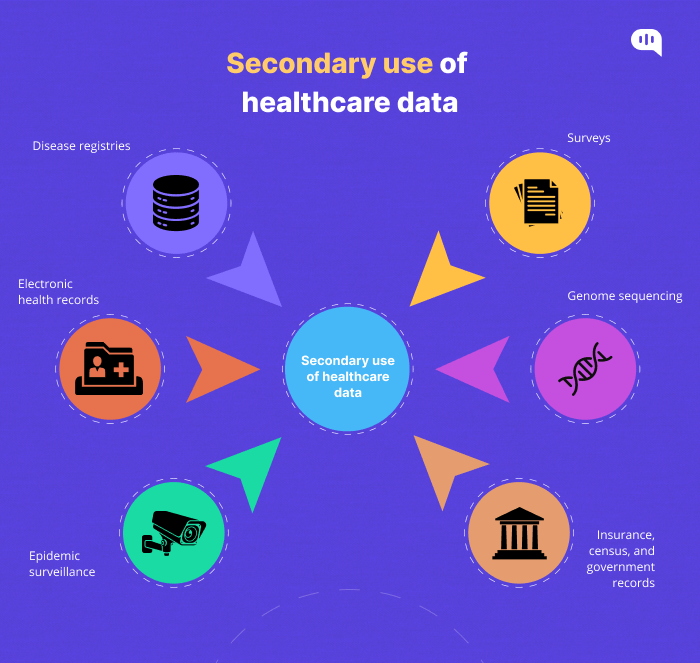

2. Secondary use of healthcare data

Secondary use means using data for a purpose other than the one for which it was collected. For instance, if you use a smartwatch, chances are that your vitals and activity data, such as heart rate, exercise patterns, and sleep patterns, are in the possession of the smartwatch manufacturer.

If the manufacturer shares this data with a third party, such as a drug manufacturer, that is secondary use. Secondary use is not inherently harmful: using cloud computing and machine learning techniques, scientists can analyze such data to detect emerging diseases.

In the wrong hands, however, this data becomes a dangerous asset that can be sold online to the highest bidder. If the third party sends you marketing material for its products based on your health data, that is an unethical use of your data.

Secondary use of healthcare data is thus a very sensitive issue, and using conversational AI to collect this data comes with its own set of challenges.
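One way to make the line between acceptable and unethical secondary use enforceable in software is purpose limitation: record which purposes each patient agreed to, and check every new use of their data against that list. The sketch below illustrates this idea under that assumption; `ConsentRecord`, `is_use_permitted`, `filter_for_purpose`, and the purpose labels are hypothetical names, not part of any particular product.

```python
from dataclasses import dataclass, field


@dataclass
class ConsentRecord:
    """Hypothetical record of the purposes a patient has consented to."""
    patient_id: str
    allowed_purposes: set[str] = field(default_factory=set)


def is_use_permitted(record: ConsentRecord, purpose: str) -> bool:
    """Allow a data use only if the patient consented to that exact purpose."""
    return purpose in record.allowed_purposes


def filter_for_purpose(records: list[ConsentRecord], purpose: str) -> list[str]:
    """Return the IDs of patients whose data may be used for `purpose`, in input order."""
    return [r.patient_id for r in records if is_use_permitted(r, purpose)]


if __name__ == "__main__":
    patients = [
        ConsentRecord("p1", {"treatment", "research"}),
        ConsentRecord("p2", {"treatment"}),
    ]
    # Research may use p1's data; marketing may use nobody's.
    print(filter_for_purpose(patients, "research"))
    print(filter_for_purpose(patients, "marketing"))
```

Because a purpose must be listed explicitly, a new use such as marketing is refused by default until patients opt in, rather than allowed until someone objects.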

3. Unauthorized use of patient data

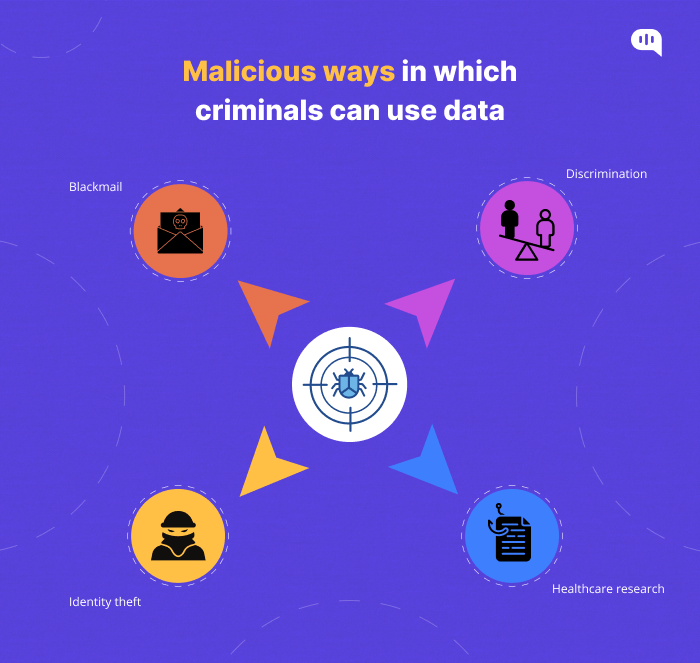

Cybercriminals can gain access to protected health information (PHI) through unauthorized means such as phishing, ransomware, and other malware attacks. They can then sell this data or use it for other malicious purposes.

Unauthorized access does not only come from outside cybercriminals. A disgruntled employee at a healthcare organization may also be able to access PHI and then sell it to the highest bidder.

Data leaks can also occur through human error, for example when a healthcare worker mistakenly sends sensitive patient information to the wrong party.

Some of the malicious ways in which criminals can use this data include:

- Blackmail

- Identity theft

- Discrimination

- Healthcare research without the patient’s permission

Beyond financial loss, unauthorized use of patient data can take an emotional toll on patients, and you cannot put a price tag on that.

Security considerations

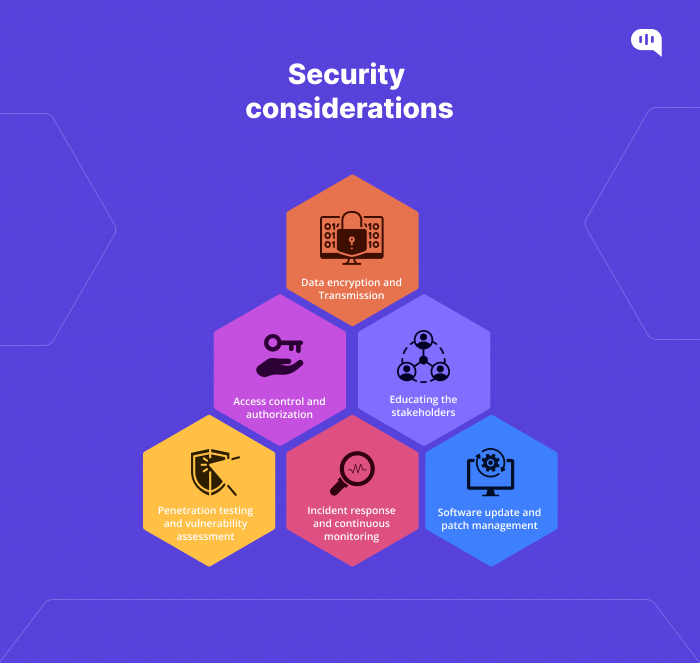

Here are some of the key security considerations for Conversational AI in healthcare:

1. Data encryption and transmission

Patient data must be encrypted both at rest and in transit, so that no one without the decryption keys can read it. End-to-end encryption keeps patient data confidential as it travels between servers and devices.
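For the in-transit half, one common approach is to require TLS with certificate verification on every connection that carries patient data. Below is a minimal sketch using Python’s standard `ssl` module, assuming TLS 1.2 as the minimum acceptable version (a policy choice made for illustration, not a requirement stated above); `make_client_tls_context` is a hypothetical helper name. Encryption at rest needs a separate mechanism, such as disk- or database-level encryption, which is not shown here.

```python
import ssl


def make_client_tls_context(
    min_version: ssl.TLSVersion = ssl.TLSVersion.TLSv1_2,
) -> ssl.SSLContext:
    """Build a client-side TLS context for sending patient data over the network.

    create_default_context() already enables certificate verification and
    hostname checking; they are set explicitly here so the policy is visible,
    and older protocol versions are refused.
    """
    ctx = ssl.create_default_context(ssl.Purpose.SERVER_AUTH)
    ctx.verify_mode = ssl.CERT_REQUIRED  # reject unverified or self-signed certificates
    ctx.check_hostname = True            # reject certificates issued for a different host
    ctx.minimum_version = min_version    # refuse anything older than the chosen version
    return ctx
```

The resulting context can be passed to `ctx.wrap_socket(sock, server_hostname=...)` or to any HTTP client that accepts an `ssl.SSLContext`, so every outbound connection inherits the same policy instead of each integration configuring TLS on its own.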

2. Access control and authorization

Only authorized personnel should have access to Conversational AI systems and the patient data they store. This access should be further restricted by role, so that sensitive patient information is available only on a need-to-know basis.
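Role-based access control can be sketched as a lookup table from roles to the permissions each role actually needs. The roles, permissions, and the `is_authorized` function below are hypothetical examples chosen for illustration; a real deployment would load them from the organization’s identity and access management system rather than hard-code them.

```python
from enum import Enum, auto


class Permission(Enum):
    """Hypothetical actions a user might take in a Conversational AI platform."""
    READ_CHAT_TRANSCRIPT = auto()
    READ_PHI = auto()
    EXPORT_PHI = auto()
    CONFIGURE_BOT = auto()


# Hypothetical role table: each role gets only the permissions its job requires.
ROLE_PERMISSIONS: dict[str, frozenset[Permission]] = {
    "physician": frozenset({Permission.READ_CHAT_TRANSCRIPT, Permission.READ_PHI}),
    "support_agent": frozenset({Permission.READ_CHAT_TRANSCRIPT}),
    "bot_admin": frozenset({Permission.CONFIGURE_BOT}),
}


def is_authorized(role: str, permission: Permission) -> bool:
    """Deny by default: unknown roles and unlisted permissions are refused."""
    return permission in ROLE_PERMISSIONS.get(role, frozenset())
```

The deny-by-default lookup means a misspelled or newly added role gets no access until someone grants it explicitly, and no role in this example can export PHI at all, which fits the need-to-know principle.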

3. Educating the stakeholders

Educate both healthcare professionals and patients on the security risks of using Conversational AI platforms to store and process PHI. Organizations should provide resources and guidelines that help all stakeholders recognize suspicious activity, such as phishing attempts, and understand the risks involved.

4. Penetration testing and vulnerability assessment

Every system has potential security loopholes, and regular vulnerability assessments are needed to find and close them. Proactive testing helps developers identify security risks and mitigate them before they become serious. Measures like extensive penetration testing help ensure that attackers cannot exploit system weaknesses to reach sensitive information.

5. Incident response and continuous monitoring

Healthcare institutions must have an incident response plan ready in case of a security breach. The plan must explain in detail how the organization will contain a leak and how it will notify affected patients. Conversational AI systems should also be monitored continuously so that anomalies are detected promptly.
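Continuous monitoring can start with simple rules. As a minimal sketch, assume the Conversational AI platform logs every patient-record access with a user ID and a timestamp in seconds. The hypothetical `AccessRateMonitor` below flags a user who opens more records within a sliding time window than a fixed threshold allows, one possible signal of the insider misuse described earlier. The threshold and window are illustrative placeholders, not recommended values, and a real system would route alerts into the incident response plan rather than simply return a boolean.

```python
from collections import defaultdict, deque


class AccessRateMonitor:
    """Flag users who open unusually many patient records in a short window.

    Hypothetical, deliberately simple rule: more than `max_accesses`
    record accesses within `window_seconds` counts as an anomaly.
    """

    def __init__(self, max_accesses: int = 50, window_seconds: float = 300.0):
        self.max_accesses = max_accesses
        self.window_seconds = window_seconds
        # Per-user queue of recent access timestamps, oldest first.
        self._events: defaultdict[str, deque[float]] = defaultdict(deque)

    def record_access(self, user_id: str, timestamp: float) -> bool:
        """Log one access and return True if the user is now over the limit.

        Timestamps are assumed to arrive in non-decreasing order per user.
        """
        events = self._events[user_id]
        events.append(timestamp)
        # Drop accesses that have fallen out of the sliding window.
        while events and timestamp - events[0] > self.window_seconds:
            events.popleft()
        return len(events) > self.max_accesses
```

For example, with `AccessRateMonitor(max_accesses=3, window_seconds=60)`, a fourth access by the same user within one minute returns `True`, while accesses spread over a longer period do not. Counting per user keeps one busy account from masking or triggering alerts for another.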

6. Software update and patch management

Keeping software up to date is vital to keeping Conversational AI systems secure. Developers must address newly identified security issues quickly and release updates, which helps protect the system against emerging threats.

Incorporating these security measures helps ensure that Conversational AI in healthcare is implemented in a secure environment, where patients and healthcare professionals can be confident that their data is protected.

Trust

Maintaining an environment of trust between healthcare professionals, patients, and AI systems is essential for integrating Conversational AI into the healthcare landscape. Here are some of the factors that help establish trust:

1. Transparency

Healthcare professionals must understand the capabilities and limitations of the Conversational AI system they are using. Patients, in particular, should be able to learn why the system recommended a particular treatment. This transparency increases their trust in the healthcare system’s decision-making process.

2. Accountability and continuous monitoring

AI systems’ performance must be monitored continuously to ensure they adhere to the highest ethical standards and guidelines. Developers and healthcare organizations are responsible for the AI’s actions, and this clear accountability fosters trust.

3. Value alignment

Conversational AI systems should be used to promote patient safety, autonomy, and well-being. They must therefore align with the values of both patients and healthcare organizations.

4. Empathy

Empathy is one of the key traits that a Conversational AI tool must possess in order to gain its users’ trust. A Conversational AI system that recognizes and responds to patients’ emotional states improves both the user experience and trust.

By prioritizing transparency, accountability, empathy, and value alignment, Conversational AI systems can foster trust among healthcare professionals and patients.

In this way, technology becomes not just a tool but a trusted partner in delivering high-quality, patient-centered care.

At Kommunicate, we envision a world-beating customer support solution to empower the new era of customer support. We would love for you to experience Kommunicate first-hand. You can sign up here and start delighting your customers right away.

Naveen is an accomplished senior content writer with a flair for crafting compelling and engaging content. With over 8 years of experience in the field, he has honed his skills in creating high-quality content across various industries and platforms.