Updated on February 21, 2025

Whenever we use AI in customer service, we use some documents or websites to train the AI. And while companies like Intercom have set the bar for AI-driven customer service solutions, we at Kommunicate have pushed the boundaries further, achieving even better document and URL scanning accuracy.

In this article, we will walk you through how we built a more efficient system at Kommunicate using Retrieval-Augmented Generation (RAG), from creating embeddings to scraping documents and websites and filtering results before sending them to an LLM.

We’ll also explain why we’re seeing significantly better results than Intercom. We’ll cover:

1. Why RAG is Key for Better AI Responses?

2. How Did We Set up an Enterprise RAG Pipeline?

3. How Do We Measure the Accuracy of Answers?

4. The Results: Kommunicate v/s Intercom

5. The Verdict

Why RAG is Key for Better AI Responses?

Before diving into the technical details, let’s start with a quick overview of Retrieval-Augmented Generation (RAG). RAG is a system that improves AI performance by combining retrieval-based methods with generative models. Rather than relying solely on pre-trained knowledge, the idea is to fetch relevant documents and generate a response based on that specific information.

This approach makes AI smarter and more contextually aware, which is critical when trying to answer complex questions from scraped documents or websites. This accuracy boost is huge in customer service, where even small inaccuracies can make a big difference.

How Did We Set up an Enterprise RAG Pipeline?

Step 1: Creating Accurate Embeddings

One of the key elements in our approach is the creation of high-quality embeddings. If you’re unfamiliar, embeddings are numerical representations of data—text, images, or tables—that maintain the semantic meaning. Think of them as the backbone of any search or matching system.

Here’s where we’ve seen improvements. By fine-tuning pre-trained models, like OpenAI’s embeddings, we’ve generated more accurate vectors to find relevant content. These embeddings allow our system to quickly search and retrieve the most helpful information for any query.
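To make the idea of searching by embedding concrete, here is a minimal sketch. It is not Kommunicate's production code: the `embed` function is a toy bag-of-words stand-in for a real (fine-tuned) embedding model, and the function names and sample chunks are hypothetical.

```python
import math
from collections import Counter

def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(text.lower().split())

def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine of the angle between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)

def search(query: str, chunks: list[str], top_k: int = 2) -> list[tuple[float, str]]:
    """Return the top_k chunks most similar to the query, best first."""
    q = embed(query)
    scored = [(cosine_similarity(q, embed(c)), c) for c in chunks]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:top_k]

# Made-up sample content for illustration only.
chunks = [
    "Pricing plans start with a free tier",
    "Call analytics show missed calls per agent",
    "Set up a virtual phone number in minutes",
]
results = search("how do call analytics work", chunks, top_k=1)
print(results[0][1])
```

With a real embedding model, only `embed` changes; the similarity search around it stays the same.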

Step 2: Scraping From a Variety of Sources

Scraping websites and documents is another area where we put in the work. We’ve built a robust scraping pipeline that handles inconsistencies in HTML structures, different file formats, and embedded content. We don’t just limit ourselves to simple web pages; the Kommunicate system can pull information from many sources, such as:

- Webpages

- PDFs

- Word documents

- Tables

- Excel sheets

- CSVs

- Zendesk Help center

- Salesforce Knowledge base

- And many others

When scraping tables or other structured data, we’ve developed methods to extract that information and make it searchable. This allows our system to handle more complex documents that other tools might struggle with.
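One simple way to make a table searchable is to flatten each row into a "header: value" sentence that can then be embedded like any other text. The sketch below uses only Python's standard `html.parser`; the class name, function name, and sample table are hypothetical, and a real pipeline would need to handle merged cells, nested tables, and other formats.

```python
from html.parser import HTMLParser

class TableExtractor(HTMLParser):
    """Collects the cell text of each <tr> in an HTML fragment."""

    def __init__(self):
        super().__init__()
        self.rows = []
        self._row = None   # cells of the row currently being read
        self._cell = None  # text pieces of the cell currently being read

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self._row = []
        elif tag in ("td", "th") and self._row is not None:
            self._cell = []

    def handle_data(self, data):
        if self._cell is not None:
            self._cell.append(data)

    def handle_endtag(self, tag):
        if tag in ("td", "th") and self._cell is not None:
            self._row.append("".join(self._cell).strip())
            self._cell = None
        elif tag == "tr" and self._row is not None:
            self.rows.append(self._row)
            self._row = None

def table_to_sentences(html: str) -> list[str]:
    """Treat the first row as headers and turn every other row into one sentence."""
    parser = TableExtractor()
    parser.feed(html)
    parser.close()
    if not parser.rows:
        return []
    header, *body = parser.rows
    return ["; ".join(f"{h}: {v}" for h, v in zip(header, row)) for row in body]

# Made-up sample table for illustration only.
html = """
<table>
  <tr><th>Plan</th><th>Price</th></tr>
  <tr><td>Basic</td><td>$10</td></tr>
  <tr><td>Pro</td><td>$25</td></tr>
</table>
"""
sentences = table_to_sentences(html)
print(sentences)
```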

The variety of content we can scrape adds a layer of versatility to our system, making it more adaptable to different industries and use cases. And this is another area where we’ve seen our approach outperform more rigid competitors like Intercom.

Step 3: Filtering and Arranging Documents Before Sending to OpenAI

Once we’ve scraped the data, not everything gets passed to the AI model. We have a filtering system that ensures only the most relevant documents or data chunks are used in response generation. We use a mix of techniques here—vector similarity, relevance scoring, and keyword matching—to filter out irrelevant or redundant information.

Another key part of our approach is arranging the documents before sending them to OpenAI. We don’t just throw a pile of scraped data at the model and hope for the best. Instead, we rank the documents by relevance, ensuring the AI gets the most useful, contextually accurate information first.
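The filtering and ranking step can be sketched as a blended score with a cut-off. This is an illustration under assumptions, not the actual Kommunicate scoring: the `Chunk` type, the 0.7 weighting, and the 0.3 threshold are hypothetical, and the vector similarity is assumed to have been computed already.

```python
from dataclasses import dataclass

@dataclass
class Chunk:
    text: str
    similarity: float  # vector similarity to the query, assumed precomputed

def keyword_overlap(query: str, text: str) -> float:
    """Fraction of query terms that also appear in the text."""
    q_terms = set(query.lower().split())
    t_terms = set(text.lower().split())
    return len(q_terms & t_terms) / len(q_terms) if q_terms else 0.0

def filter_and_rank(query, chunks, min_score=0.3, weight=0.7):
    """Drop duplicates and low scorers, then return chunks best first."""
    scored = []
    seen = set()
    for chunk in chunks:
        if chunk.text in seen:  # redundant: exact duplicate of an earlier chunk
            continue
        seen.add(chunk.text)
        score = weight * chunk.similarity + (1 - weight) * keyword_overlap(query, chunk.text)
        if score >= min_score:  # irrelevant chunks fall below the threshold
            scored.append((score, chunk))
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [chunk for _, chunk in scored]

# Made-up sample chunks for illustration only.
candidates = [
    Chunk("our pricing tiers and features", 0.9),
    Chunk("company history", 0.1),
    Chunk("pricing for add-ons", 0.6),
    Chunk("our pricing tiers and features", 0.9),
]
ranked = filter_and_rank("pricing tiers", candidates)
print([c.text for c in ranked])
```

Here the duplicate and the off-topic "company history" chunk are dropped, and the remaining two are returned with the stronger match first.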

When multiple matching paragraphs are extracted from a document, we arrange them in the order in which they appear in that document. Imagine being given paragraphs from a story: if the paragraphs are jumbled, the story won’t make sense. They need to follow their original order to retain their meaning.
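A minimal sketch of that arrangement: documents are ordered by their best-scoring paragraph, while paragraphs within one document are put back in their source order. The dictionary keys (`doc_id`, `position`, `score`, `text`) are hypothetical names chosen for this example.

```python
from collections import defaultdict

def arrange(matches):
    """Order documents by best score, and paragraphs within a document by position."""
    by_doc = defaultdict(list)
    for match in matches:
        by_doc[match["doc_id"]].append(match)
    # Most relevant document first, judged by its best-scoring paragraph.
    docs = sorted(
        by_doc.values(),
        key=lambda group: max(m["score"] for m in group),
        reverse=True,
    )
    ordered = []
    for group in docs:
        # Within a document, restore the order in which paragraphs appear.
        ordered.extend(sorted(group, key=lambda m: m["position"]))
    return ordered

# Made-up retrieval results for illustration only.
matches = [
    {"doc_id": "guide", "position": 3, "score": 0.9, "text": "Step three"},
    {"doc_id": "faq", "position": 0, "score": 0.5, "text": "FAQ intro"},
    {"doc_id": "guide", "position": 1, "score": 0.7, "text": "Step one"},
]
arranged = [m["text"] for m in arrange(matches)]
print(arranged)
```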

This filtering and arrangement system makes the AI more efficient and accurate. The result? More precise answers, quicker response times, and fewer mistakes.

Step 4: Ensuring Contextual Relevance

One of the biggest challenges in AI-driven search is ensuring the responses are contextually relevant. This is where many systems fall short—especially when dealing with complex conversations.

For example:

Assistant: Welcome to the U.S. Road Trip AI assistant!

User: What’s a popular road trip route in the U.S.?

Assistant: Route 66 is a famous road trip stretching from Chicago to Santa Monica, covering 2,400 miles.

User: What are some must-see stops along Route 66?

Assistant: Some popular stops include the Grand Canyon in Arizona, the Cadillac Ranch in Texas, and the Gateway Arch in St. Louis.

User: What’s the best time to travel?

Assistant: The best time to travel on Route 66 is from late spring to early fall (May to October), when the weather is more favorable, and most attractions are open.

This is where contextual understanding comes in. The user’s last question alone doesn’t say which route or place they mean, but the chat history makes it clear that they are asking about Route 66.

Kommunicate adds a contextual layer that interprets each question in light of the chat conversation and maintains continuity across sections of documents. The result is accurate and highly relevant answers to the user’s query.

In practice, this means better results for our customers, especially when using our system to answer highly specific or technical questions.
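One very simple way to carry context into retrieval is to fold recent user turns into the search query. The sketch below is a deliberately naive illustration, not the contextual layer Kommunicate uses; the function name and the `max_turns` parameter are hypothetical, and many systems instead ask an LLM to rewrite the question into a standalone one.

```python
def build_retrieval_query(history, question, max_turns=2):
    """Prefix the new question with the most recent user turns.

    history: list of (role, text) tuples, oldest first.
    """
    previous_user_turns = [text for role, text in history if role == "user"]
    context = previous_user_turns[-max_turns:]
    return " ".join(context + [question])

history = [
    ("assistant", "Welcome to the U.S. Road Trip AI assistant!"),
    ("user", "What's a popular road trip route in the U.S.?"),
    ("assistant", "Route 66 is a famous road trip stretching from Chicago to Santa Monica."),
    ("user", "What are some must-see stops along Route 66?"),
    ("assistant", "Some popular stops include the Cadillac Ranch in Texas."),
]
question = "What's the best time to travel?"
query = build_retrieval_query(history, question)
print(query)
```

The resulting query mentions Route 66, so a similarity search has something specific to match against even though the final question does not name the route.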

Step 5: Prompt Engineering

This is one of the most critical parts of the pipeline. Instructions passed to the AI system should be specific. The model must be told explicitly to answer only from the provided documents; otherwise, it risks answering from general internet knowledge rather than from your content.

Kommunicate maintains an internal prompt to handle such corner cases.
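As an illustration of this kind of instruction, the sketch below assembles a prompt that restricts the model to the supplied documents. The wording is a hypothetical example, not Kommunicate's internal prompt, and `build_prompt` is a made-up helper name.

```python
def build_prompt(question, documents):
    """Build a prompt that tells the model to answer only from the given documents."""
    context = "\n\n".join(
        f"[Document {i}]\n{doc}" for i, doc in enumerate(documents, start=1)
    )
    return (
        "Answer the question using only the documents below. "
        "If the documents do not contain the answer, say that you do not know.\n\n"
        f"{context}\n\n"
        f"Question: {question}\n"
        "Answer:"
    )

# Made-up documents for illustration only.
prompt = build_prompt(
    "How quickly can I set up a phone system?",
    ["Setup takes a few minutes.", "Plans include call analytics."],
)
print(prompt)
```

Giving the model an explicit fallback ("say that you do not know") is one common way to discourage answers that are not grounded in the documents.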

How Do We Measure the Accuracy of Answers?

At this point, you’re probably wondering how this stacks up against other big players in the field, like Intercom. We’ve done the comparisons, and the data speaks for itself.

Regarding accuracy, our system consistently outperforms Intercom, especially when matching the proper documents to a user’s query.

Where Intercom might return broadly relevant answers that lack depth, our system can dig deeper and return more precise, context-aware responses.

We’ve also seen improvements in response time and overall user satisfaction. By filtering and arranging the documents before sending them to the model, we’ve cut down on the time it takes to get an accurate answer—incredibly important for users.

We trained both the Fin chatbot and the Kommunicate AI chatbot on the following websites:

- CallHippo – https://callhippo.com/

- QuickTech – https://www.quicktech.la/

- Supercell Games – https://supercell.com/en/

And on the following documents:

- Banking – It’s Origins and Meaning – https://drive.google.com/file/d/1_Nsj9yGWMx_HL_pv7rj6n2XWl1UTwRXk/view?usp=sharing

- WEF – Education in India Report – 2022 – https://drive.google.com/file/d/1oc1diABlSYKdYEFtHiAK2kHALOIo_6Fn/view?usp=sharing

- National Education Policy Document – India – 2020 – Education policy.pdf

Once both the chatbots were trained, we asked them the following questions:

1. What are the key features of CallHippo’s virtual phone system?

2. How does CallHippo’s call analytics help businesses improve customer service?

3. What are the pricing tiers offered by CallHippo, and what features do they include?

4. How quickly can businesses set up their phone system using CallHippo?

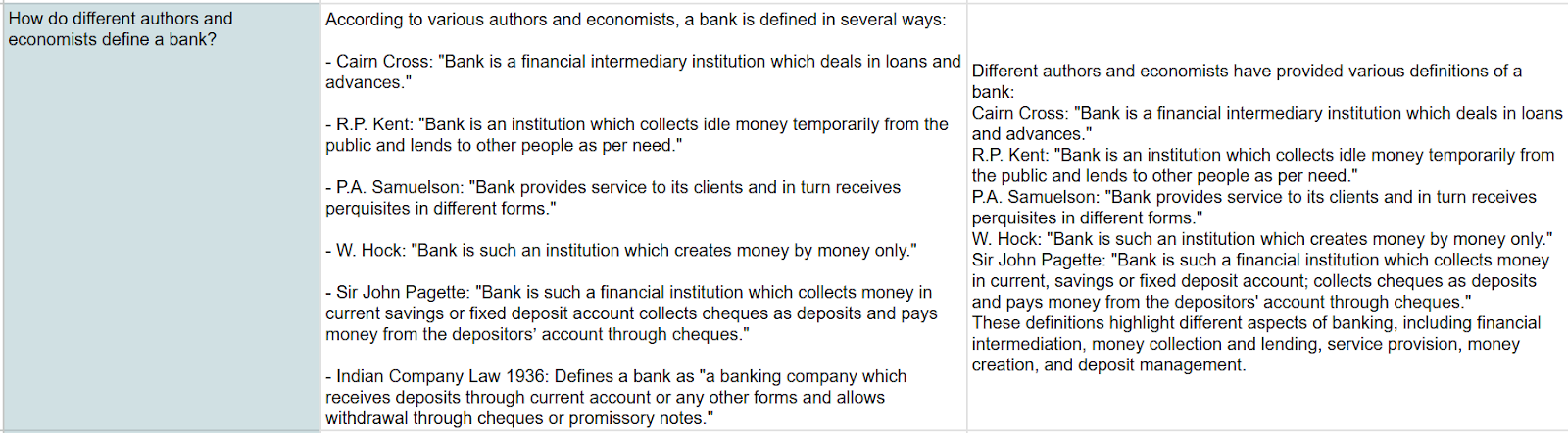

5. How do different authors and economists define a bank?

You can access the complete list of questions and the answers provided by Kommunicate and Intercom through this Excel sheet.

Once the chatbots had answered, we rated each response for accuracy (whether it matched the source information) and quality (whether it was well formatted, used proper language, and was accurate).

For example, in this question – “How do different authors and economists define a bank?”

Both chatbots accurately reported the definitions given by various authors. However, the Kommunicate reply is better formatted and more readable. So, both chatbots were accurate, but the Kommunicate reply was of higher quality.

The Results: Kommunicate v/s Intercom

We asked both chatbots 85 questions, and the results were as follows:

| | Kommunicate | Intercom |
| --- | --- | --- |
| Correct Responses | 84 | 84 |
| Quality Responses | 82 | 63 |
| Accuracy % | 98.82% | 98.82% |
| Quality % | 96.47% | 74.12% |
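The percentages can be recomputed from the response counts. A minimal sketch, assuming each percentage is the count divided by all 85 questions and rounded to two decimals (the helper name and data layout are hypothetical):

```python
TOTAL_QUESTIONS = 85

def percentage(count, total):
    """Share of the total, as a percentage rounded to two decimals."""
    return round(100 * count / total, 2)

# Counts taken from the results table.
counts = {
    "Kommunicate": {"correct": 84, "quality": 82},
    "Intercom": {"correct": 84, "quality": 63},
}

summary = {
    name: {metric: percentage(value, TOTAL_QUESTIONS) for metric, value in metrics.items()}
    for name, metrics in counts.items()
}
for name, metrics in summary.items():
    print(name, metrics)
```

Under that assumption, accuracy comes to 98.82% for both chatbots, and quality to 96.47% for Kommunicate and 74.12% for Intercom.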

The Verdict

To sum it up, our approach to document and website scraping—powered by RAG and enhanced by our focus on embeddings, filtering, and contextual understanding—has enabled us to achieve better results than competitors like Intercom. We’re delivering more accurate, faster, and contextually aware responses, which makes a huge difference in real-world applications.

We’d love to chat with you if you want to see how this could work for your business. Reach out, and we can dive into how our system can solve your unique challenges.

As a seasoned technologist, Adarsh brings more than 14 years of experience in software development, artificial intelligence, and machine learning to his role. His expertise in building scalable and robust tech solutions has been instrumental in the company’s growth and success.