Updated on February 12, 2025

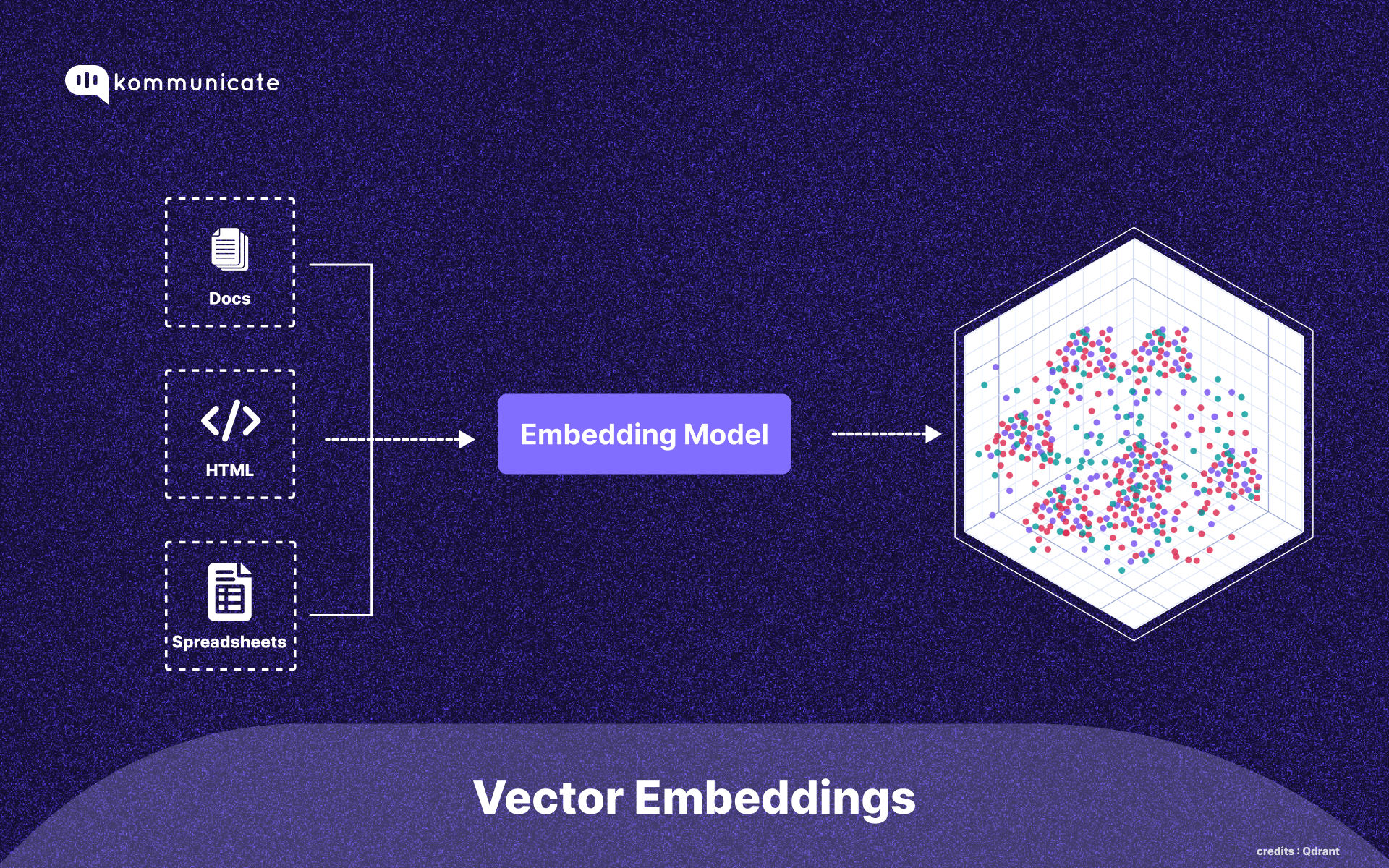

One foundational concept that makes LLMs possible is vector embedding. The basic idea is simple: ML models require numbers to function, and vectorization converts different kinds of data into numbers.

Embedding techniques are prevalent across AI technology today, in the usual suspects like ChatGPT and Claude, as well as in Google search, voice assistants, and many other products.

We use them in our RAG-based models for customer service as well.

But, as with everything in AI, the science of vectorization has changed dramatically and given rise to jargon that makes it harder to follow. So, in this guide, we are cutting through the jargon and explaining how vectorization actually works.

First, we’re starting with the critical topic: “What are vector embeddings, and how are they generated?”

What are Vector Embeddings?

Machine learning works with numbers and matrices built out of them.

However, in real life, data is more complex. Whenever you work with a dataset that contains text, images, or other unstructured content, it needs to be turned into a vector (basically a list of numbers) before you can use it for ML.

How are these created? One simple approach is to record which words appear in each piece of text, and then measure how close the resulting vectors are to gauge how similar the texts are.

So, if we have two sentences:

- I love you.

- You love me.

We can represent them using the following table:

| Word | Sentence 1 | Sentence 2 |
| --- | --- | --- |
| I | 1 | 0 |
| love | 1 | 1 |
| you | 1 | 1 |
| me | 0 | 1 |

So, the vectors are:

- [1,1,1,0]

- [0,1,1,1]

The cosine similarity between them is 0.667 (the closer the cosine is to 1, the higher the similarity).
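The 0.667 figure can be reproduced by hand. Here is a minimal sketch using only the Python standard library; the function name `cosine_similarity` is our own choice for illustration, not part of any particular library.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Entries follow the table order: I, love, you, me
sentence_1 = [1, 1, 1, 0]  # "I love you."
sentence_2 = [0, 1, 1, 1]  # "You love me."

similarity = cosine_similarity(sentence_1, sentence_2)
print(round(similarity, 3))  # 0.667
```

The two sentences share two words ("love" and "you"), and each vector has length √3, so the score works out to 2 / 3.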

This score captures the similarity between the two sentences, and mapping similarity this way leads to a remarkable result.

Since similar words are often used in similar contexts (for example, we often use “sun” and “bright” together), we can map meaning in the real world to distance in vector space.

So, now machine learning algorithms can associate:

- “Cat” with “Pet”

- “A little boy is walking” with “A little boy is running.”

- “Jack and Jill” with “Went up the hill.”

With these distances, vector embeddings help LLMs map word meanings and predict the most probable next words in a sequence.

The vectors used most often in modern ML are dense vectors, so let’s look at how they differ from sparse ones.

What are Sparse and Dense Vectors?

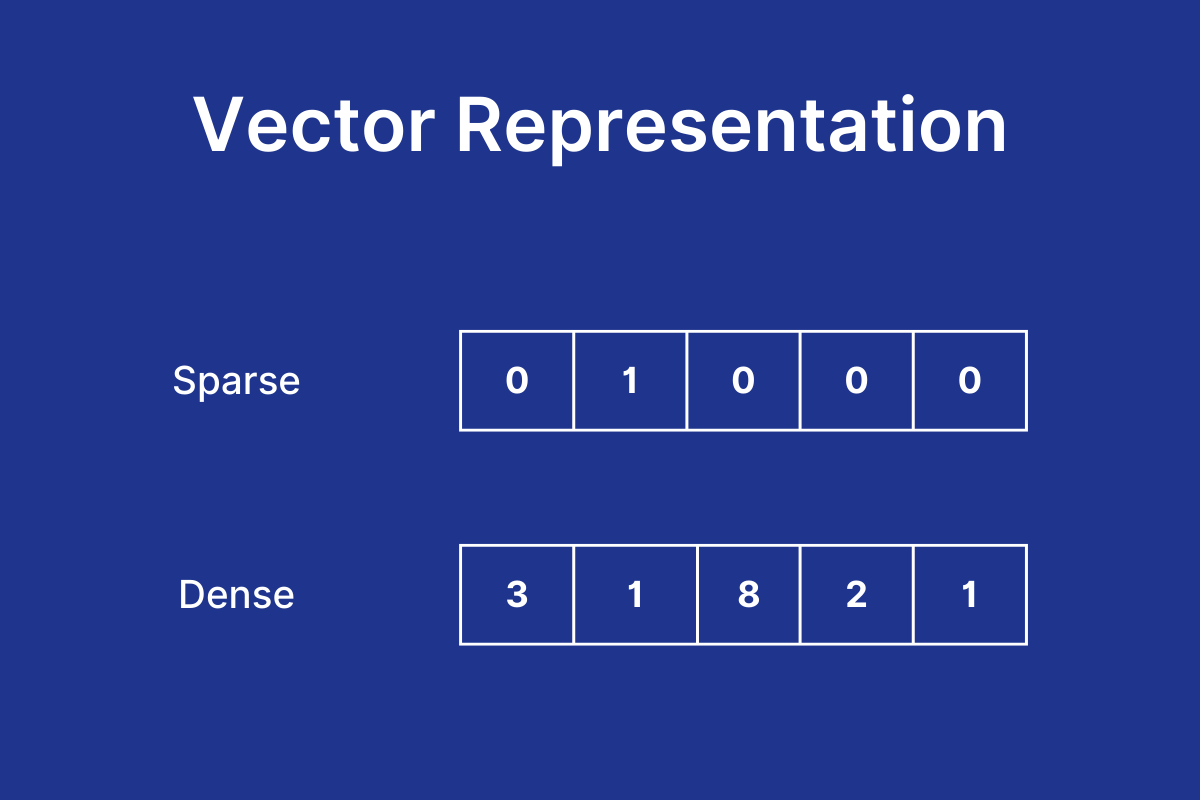

Two types of vector embeddings are used: sparse vectors and dense vectors.

Sparse Vectors

When you have a large volume of data to vectorize, you’ll probably want a compact way to store it. Sparse vectors offer one: most of their values are zero, so only the non-zero entries need to be stored.

A sparse embedding represents a text by the tokens it contains and measures distance based on those tokens. So, in the case of the two sentences:

- A goes to the house of B.

- B goes to the house of A.

It will give you a near-perfect match, even though the sentences mean different things.
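To see why these two sentences match, here is a minimal sketch that assumes a plain bag-of-words count. That is our assumption for illustration, since no specific sparse scheme is named here, and the helper names `tokenize` and `bag_of_words` are hypothetical.

```python
import math
from collections import Counter

def tokenize(sentence):
    """Lowercase, drop the trailing period, split on whitespace."""
    return sentence.lower().rstrip(".").split()

def bag_of_words(sentence, vocabulary):
    """Count how often each vocabulary word appears; word order is ignored."""
    counts = Counter(tokenize(sentence))
    return [counts[word] for word in vocabulary]

def cosine_similarity(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

s1 = "A goes to the house of B."
s2 = "B goes to the house of A."
vocabulary = sorted(set(tokenize(s1)) | set(tokenize(s2)))

v1 = bag_of_words(s1, vocabulary)
v2 = bag_of_words(s2, vocabulary)
print(v1 == v2)  # True
```

Because both sentences contain exactly the same words, a count-based representation cannot tell them apart, even though who visits whom is reversed.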

Our first example of vector embeddings uses this kind of sparse structure too, which is why its score reflects only word overlap and not meaning. By the same logic, two sentences that mean nearly the same thing but use different words end up far apart.

In ML, sparse embeddings store only the relevant data. If you were to vectorize your documents, these vectors would capture specific tokens and where they occur, kept in an index for quick reference.

This can be very important for a medical search bot, which needs to quickly find specific word combinations in a massive amount of text.

However, these vector embeddings are not great at semantic understanding. ChatGPT and our customer service chatbots need to understand semantics, so we move to dense representations instead.

Dense Vectors

Dense vector embeddings are high-dimensional. Mathematically, they are vectors in which most values are non-zero.

Here, each word or sentence is described along many dimensions. A common size is 768 dimensions (the hidden size of BERT-base), and a neural network learns what each dimension holds. These dimensions capture the contextual meaning of the words and map them out in a vector space.
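The contrast between the two kinds of vector can be sketched as follows. All numbers here are made up for illustration: the vocabulary size, the three token positions, and the random dense values are assumptions, and the dense vector has only 8 dimensions to stay readable.

```python
import random

def nonzero_fraction(vector):
    """Share of entries in the vector that are not zero."""
    return sum(1 for x in vector if x != 0) / len(vector)

# Sparse: one slot per vocabulary word, with only the words present set to 1.
vocabulary_size = 10_000
sparse = [0] * vocabulary_size
for index in (12, 845, 9_301):  # hypothetical positions of three tokens
    sparse[index] = 1

# Dense: far fewer dimensions, but nearly every one carries a value.
rng = random.Random(0)  # random values stand in for learned weights
dense = [rng.uniform(-1, 1) for _ in range(8)]

print(nonzero_fraction(sparse))  # 0.0003
print(nonzero_fraction(dense))
```

In a trained model, the dense values would be learned by the network instead of drawn at random.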

This is what word2vec did. Its approach came in two variants.

- Skip-Gram Model – The model takes a word, starts from a vector with random weights, and uses a neural network to predict the surrounding context words. The weights that predict the context correctly are then chosen as the vector representation.

- Continuous Bag of Words model – The model takes the surrounding context words as input, and the neural network tries to predict the word in the middle. The hidden layer weights that make the correct prediction are chosen as the vector representation.

In the first case, the model tries to maximize the probability of finding the proper context for a word, while in the second case, the model attempts to reduce the likelihood of ending up with the wrong word for a given context.
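The difference between the two variants is easiest to see in the training examples each one builds from the same text. The sketch below only generates those examples and does not train a network; the function names and the window size of 1 are assumptions made for illustration.

```python
def skip_gram_pairs(tokens, window=1):
    """(center, context) pairs: the center word is the input, each neighbor is a target."""
    pairs = []
    for i, center in enumerate(tokens):
        lo = max(0, i - window)
        hi = min(len(tokens), i + window + 1)
        for j in range(lo, hi):
            if j != i:
                pairs.append((center, tokens[j]))
    return pairs

def cbow_pairs(tokens, window=1):
    """(context, center) pairs: the neighbors are the input, the center word is the target."""
    pairs = []
    for i, center in enumerate(tokens):
        lo = max(0, i - window)
        hi = min(len(tokens), i + window + 1)
        context = [tokens[j] for j in range(lo, hi) if j != i]
        pairs.append((context, center))
    return pairs

tokens = "a little boy is walking".split()
print(skip_gram_pairs(tokens)[:3])
# [('a', 'little'), ('little', 'a'), ('little', 'boy')]
print(cbow_pairs(tokens)[:2])
# [(['little'], 'a'), (['a', 'boy'], 'little')]
```

Skip-gram produces one example per (word, neighbor) pair, while CBOW produces one example per word with all of its neighbors as input.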

You can see how these models can create a semantic representation of large amounts of text at scale.

While word2vec was groundbreaking and essential, it’s not what we use in our Generative AI chatbot models. For that, we need to move on to more recent models.

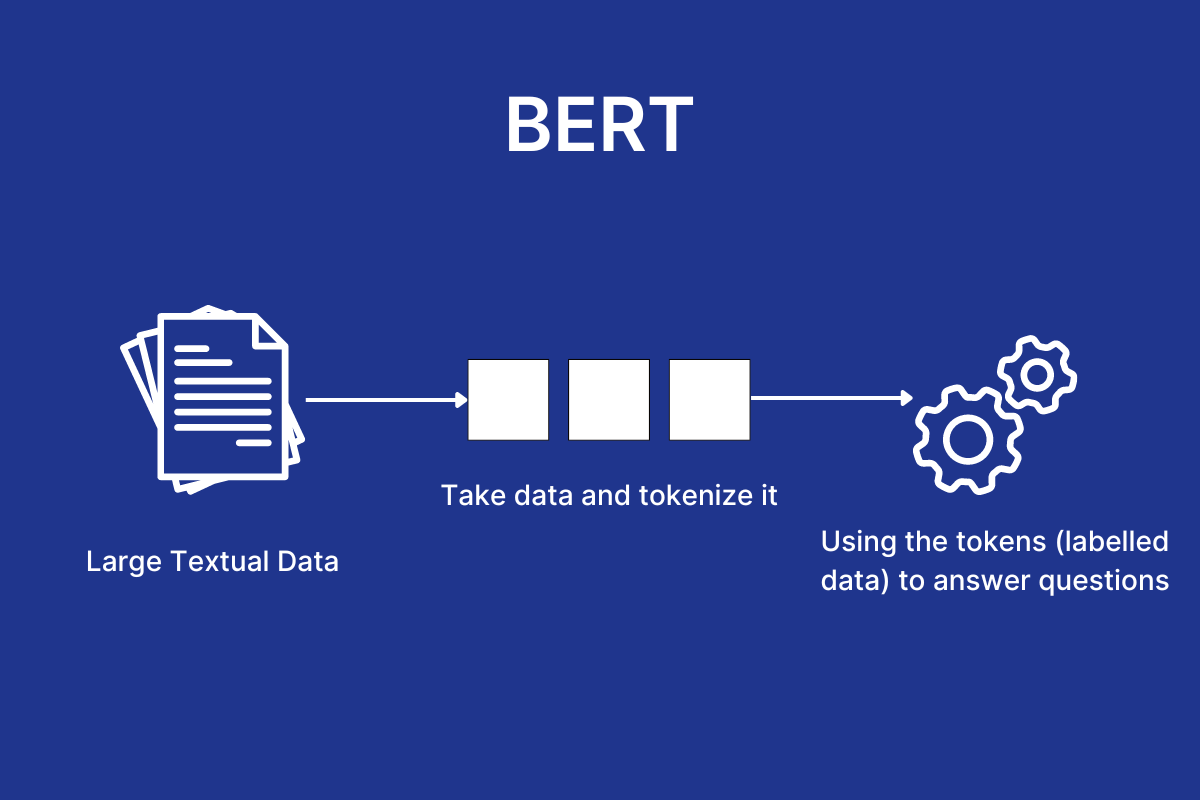

Most Used Vector Embeddings: BERT and Future Models

Word2vec assigns a single vector to each word (or n-gram), regardless of the sentence it appears in. It was good at identifying words in simple contexts but struggled when the same word carried different meanings in different contexts.

For example, word2vec struggled with the meaning of “bank” given these two sentences:

- The commercial bank is closed.

- The river bank of the Thames is open.
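The limitation comes from the lookup-table nature of static embeddings. In this minimal sketch, the three-dimensional vectors are hypothetical values chosen for illustration (real word2vec vectors are learned and much longer), and `embed` is a made-up helper name.

```python
# Hypothetical static embedding table: exactly one vector per word.
static_embeddings = {
    "commercial": [0.9, 0.1, 0.0],
    "river": [0.0, 0.2, 0.9],
    "bank": [0.5, 0.1, 0.4],
}

def embed(sentence, table):
    """Look up each known word; the surrounding sentence is never consulted."""
    words = sentence.lower().rstrip(".").split()
    return {word: table[word] for word in words if word in table}

financial = embed("The commercial bank is closed.", static_embeddings)
riverside = embed("The river bank of the Thames is open.", static_embeddings)

print(financial["bank"] == riverside["bank"])  # True
```

Since “bank” maps to the same vector in both sentences, nothing in the representation separates the financial sense from the riverside sense.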

BERT is a transformer model that pays attention to the context of a word. It moved beyond analyzing a single word in isolation and could infer a word’s meaning from its context. So, in the case of the previous sentences, it could classify “bank” as a financial institution when it appears alongside the word “commercial.”

Plus, BERT is bidirectional (though non-directional would be a better description). It reads the words on both sides of a position at once, and it is trained by hiding random words and predicting them from the rest of the sentence. This gives it a fuller picture of context than word2vec, which was limited to a small window of neighboring words.
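BERT’s training objective, masked language modeling, hides random tokens and asks the model to recover them from the words on both sides. The sketch below only prepares such a training example and is deliberately simplified: it always substitutes `[MASK]`, whereas BERT’s actual recipe sometimes keeps the token or swaps in a random one. The function name and the fixed seed are assumptions for illustration.

```python
import random

def mask_tokens(tokens, mask_rate=0.15, seed=0):
    """Replace a random subset of tokens with [MASK].

    Returns the masked sequence and a {position: original_token} map
    that a model would be trained to predict.
    """
    rng = random.Random(seed)
    count = max(1, round(len(tokens) * mask_rate))
    positions = sorted(rng.sample(range(len(tokens)), count))
    masked = list(tokens)
    targets = {}
    for position in positions:
        targets[position] = tokens[position]
        masked[position] = "[MASK]"
    return masked, targets

tokens = "the river bank of the thames is open".split()
masked, targets = mask_tokens(tokens)
print(masked)
print(targets)
```

To fill in a hidden position, the model has to use the tokens before and after it, which is what makes the resulting representations context-aware.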

BERT has also evolved into Sentence-BERT (S-BERT). This model produces embeddings for entire sentences at once and is more computationally efficient when comparing them.

Parting Thoughts

Vectorization is a process in which we take large amounts of data and represent them numerically. Since computers work with numbers, we can use vector embeddings created with this method to help computers process complex data like text and images.

Vectors can be sparse (mostly zero values) or dense (mostly non-zero values that encode meaning), and dense vectors are critical to how computers understand human language.

Dense vectors are possible partly because of the seminal “word2vec” paper from 2013, which gave the entire field of NLP a massive boost. It provided an algorithmic and efficient method for representing textual data as vectors.

Over the years, word2vec has been superseded by newer transformer-based models (BERT and S-BERT). However, these concepts are still critical to current Generative AI practice. ChatGPT can understand our prompts because it uses modern embedding models to capture semantic context.

And, of course, these data representations also power the customer support chatbots we create.

As a seasoned technologist, Adarsh brings more than 14 years of experience in software development, artificial intelligence, and machine learning to his role. His expertise in building scalable and robust tech solutions has been instrumental in the company’s growth and success.