Updated on June 30, 2025

OpenAI’s ChatGPT has brought AI’s potential to the mainstream by handling tasks ranging from code generation to blog posts, stories, and poems. ChatGPT reached an estimated 100 million monthly users within two months of launch, which made it the fastest-growing consumer application at the time. Individuals adopted ChatGPT first, but enterprises quickly saw its potential for customer support and automation. One significant challenge remained: businesses needed an efficient way to train chatbots on their own proprietary data. Website scraping offers a solution.

What is Website Scraping and Why is it Useful?

In the context of chatbots, website scraping is the technique of extracting data directly from websites to train chatbots and enhance their capabilities. A chatbot trained on scraped data can give more contextual and accurate answers because its responses draw on the information published on the site. This lets businesses turn their site content into training data, so the bot’s responses reflect the latest product details, policies, or FAQs. Using a web scraper API simplifies the process by automating data extraction, keeping updates consistent, and minimizing the manual effort of keeping a chatbot knowledge base current.

Although website scraping might seem novel in this context, it dates back to 1993, when Matthew Gray at MIT created the Wanderer, a bot designed to measure the size of the web. Since then, tools like JumpStation and Beautiful Soup (a Python-based HTML parser) have simplified scraping tasks, helping developers gather site information quickly. Today, visual scrapers allow users to highlight sections of a webpage and convert that data into structured files, simplifying information retrieval.

How Does Website Scraping Work in Training Chatbots?

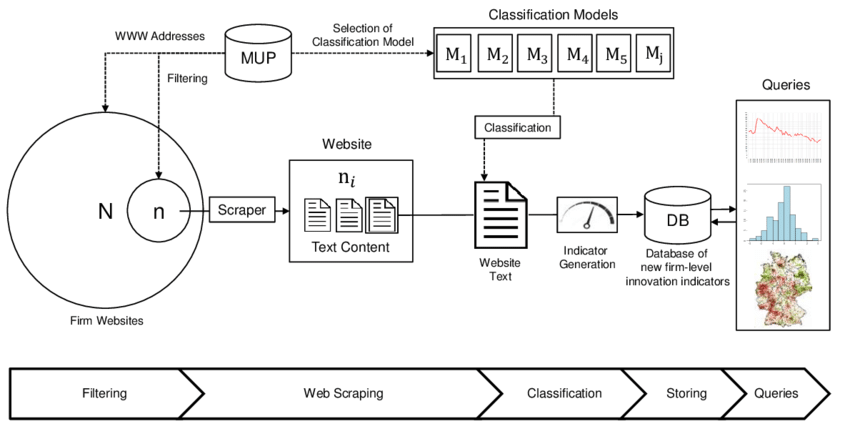

Website scraping extracts content from your site’s HTML structure to create training data for the chatbot. Here’s an overview of the process:

1. Data Extraction: The scraper navigates your website’s HTML content to collect specific text data. For example, a scraper written in Python can identify and extract the text inside designated tags or sections (e.g., <p>, <h1> tags).

2. Chunking Text: The extracted data is segmented or “chunked” into smaller, manageable sections, which ensures the chatbot can process and reference text more efficiently during interactions.

3. Embedding Creation: These text chunks are then transformed into “embeddings,” which are dense numerical representations. Embeddings capture the meaning and context of the text, allowing the chatbot to interpret user queries accurately. Embeddings are crucial in NLP, supporting tasks like search, classification, and contextual relevance.

4. LLM-Driven Responses: Once embedded, the data is accessible to a large language model (LLM), such as the models behind ChatGPT, which generates responses based on the content of your site. When a user asks a question, the chatbot compares the question against these embeddings, retrieves the most relevant chunks, and passes them to the LLM so the answer is accurate and contextually relevant.
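The four steps above can be sketched in a few lines of Python. This is a minimal illustration that uses only the standard library, not a description of how Kommunicate or any particular product implements them. The sample page, the function names, and the `max_words` limit are all assumptions made for the example. The "embedding" here is a simple word-count vector standing in for the dense vectors a real embedding model would produce.

```python
import math
import re
from collections import Counter
from html.parser import HTMLParser


class TextExtractor(HTMLParser):
    """Step 1: collect the text inside <p> and heading tags."""

    KEEP = {"p", "h1", "h2", "h3"}

    def __init__(self):
        super().__init__()
        self._open = 0    # number of kept tags currently open
        self.blocks = []  # one string per extracted element

    def handle_starttag(self, tag, attrs):
        if tag in self.KEEP:
            self._open += 1
            self.blocks.append("")

    def handle_endtag(self, tag):
        if tag in self.KEEP and self._open:
            self._open -= 1

    def handle_data(self, data):
        if self._open:
            self.blocks[-1] += data


def extract_text(html):
    parser = TextExtractor()
    parser.feed(html)
    parser.close()
    # Collapse whitespace and drop empty elements.
    return [" ".join(b.split()) for b in parser.blocks if b.strip()]


def chunk_text(blocks, max_words=10):
    """Step 2: pack blocks into chunks, closing a chunk once the next
    block would push it past max_words (an oversized block stays whole)."""
    chunks, current = [], []
    for block in blocks:
        words = block.split()
        if current and len(current) + len(words) > max_words:
            chunks.append(" ".join(current))
            current = []
        current.extend(words)
    if current:
        chunks.append(" ".join(current))
    return chunks


def embed(text):
    """Step 3 (toy version): a sparse word-count vector.
    A real system would call an embedding model here instead."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query, index, top_k=1):
    """Step 4 (retrieval half): rank chunks by similarity to the query."""
    q = embed(query)
    ranked = sorted(index, key=lambda item: cosine(q, item[1]), reverse=True)
    return [chunk for chunk, _ in ranked[:top_k]]


# Hypothetical page content used only for this example.
PAGE = """
<html><body>
  <h1>Shipping policy</h1>
  <p>Orders ship within two business days of purchase.</p>
  <h1>Returns</h1>
  <p>Items can be returned within 30 days.</p>
</body></html>
"""

chunks = chunk_text(extract_text(PAGE))
index = [(chunk, embed(chunk)) for chunk in chunks]

question = "When can items be returned?"
context = retrieve(question, index)[0]

# The retrieved chunk is placed in the prompt that would be sent to the LLM.
prompt = f"Answer using only this context:\n{context}\n\nQuestion: {question}"
print(prompt)
```

Swapping `embed` for a call to a real embedding model and sending `prompt` to an LLM would turn this sketch into a basic question-answering loop over your site’s content.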

Legal and Ethical Considerations of Website Scraping

Before implementing scraping, it’s essential to understand its legal and ethical limitations:

- Terms of Service: Many websites prohibit scraping, and scraping content without permission could violate their terms.

- Data Ownership: Scraped data may be proprietary; using it for business purposes without permission could result in legal issues.

- Privacy Concerns: Ensure that scraping excludes personal or sensitive user data, in line with privacy regulations (e.g., GDPR).
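Two of these checks can be partly automated. The sketch below rests on assumptions that go beyond this article: it treats a site’s robots.txt file as a first signal of which paths the owner does not want crawled, and it strips email addresses from scraped text before the text is used for training. The robots.txt content, the `example-bot` user agent, and the URLs are hypothetical. Neither check replaces reading a site’s terms of service or getting legal advice.

```python
import re
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content. A real scraper would download it
# from the site's /robots.txt path instead of hard-coding it.
ROBOTS_TXT = """\
User-agent: *
Disallow: /account/
Disallow: /checkout/
"""

# Simple pattern for email addresses; it will not catch every form of personal data.
EMAIL_PATTERN = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")


def allowed_urls(urls, robots_txt, user_agent="example-bot"):
    """Keep only the URLs that robots.txt permits this user agent to fetch."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if parser.can_fetch(user_agent, url)]


def redact_emails(text):
    """Replace email addresses in scraped text with a placeholder."""
    return EMAIL_PATTERN.sub("[email removed]", text)


candidates = [
    "https://example.com/faq",
    "https://example.com/account/orders",
    "https://example.com/pricing",
]
to_scrape = allowed_urls(candidates, ROBOTS_TXT)
clean_text = redact_emails("Contact jane.doe@example.com for help.")
```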

How to Set Up a Chatbot with Kommunicate’s Website Scraper: A Step-by-Step Guide


By now, you know a bit about scraping a website, how the technology grew, and what lies under the hood of a website scraper powered by an LLM.

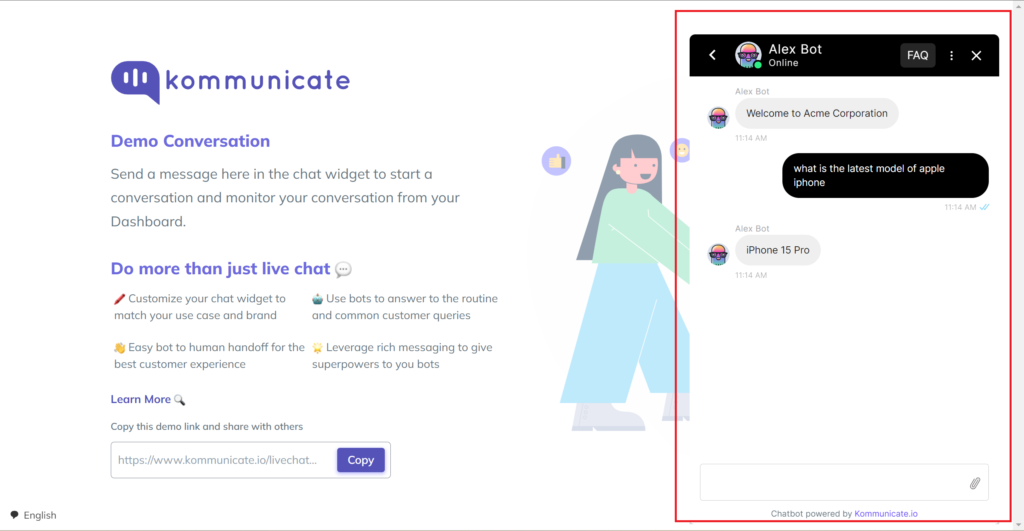

To help you build a site-trained chatbot, here’s how to use Kommunicate’s Kompose bot builder.

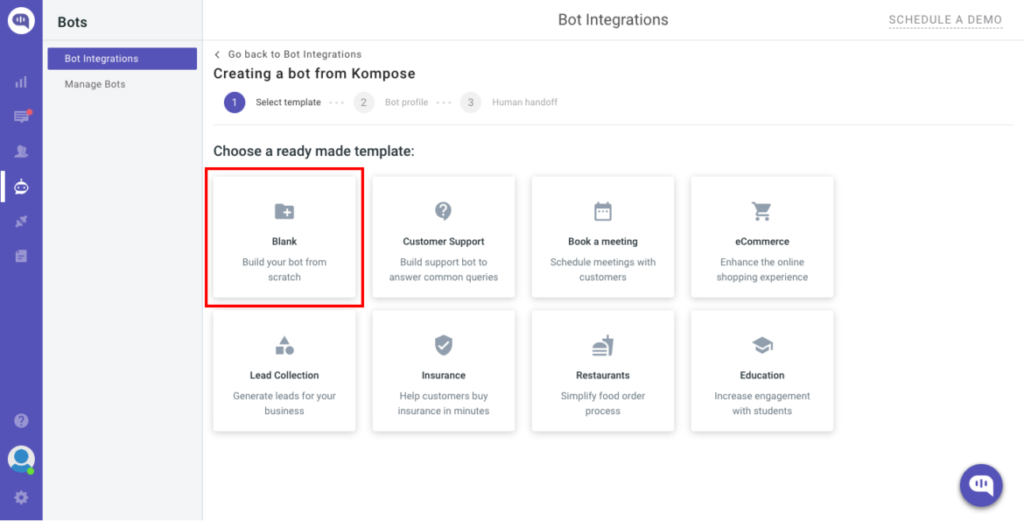

Step 1: Accessing the Bot Builder

- Log in to your Kommunicate dashboard (or sign up if you’re new).

- Navigate to Bot Integrations and select Create a bot from Kompose, using the blank template.

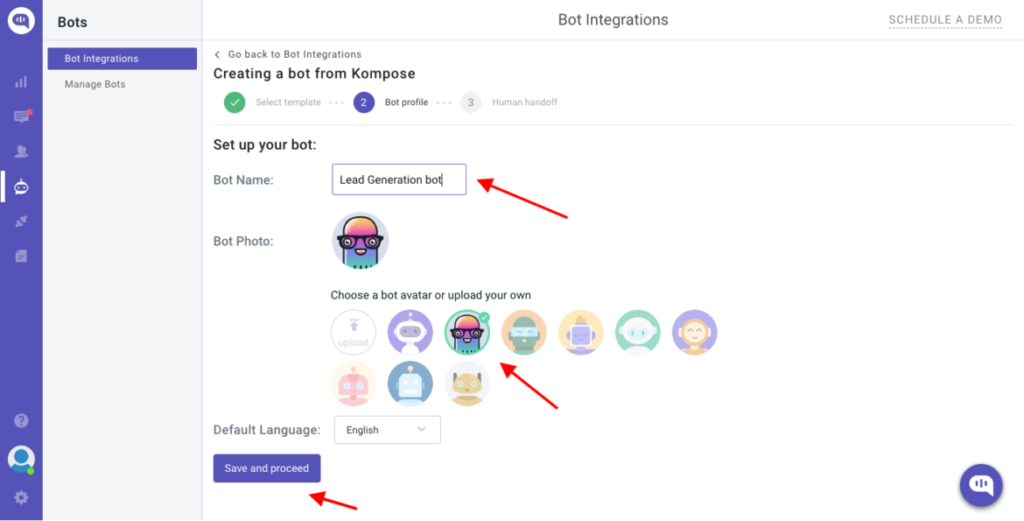

Step 2: Configuring Bot Profile

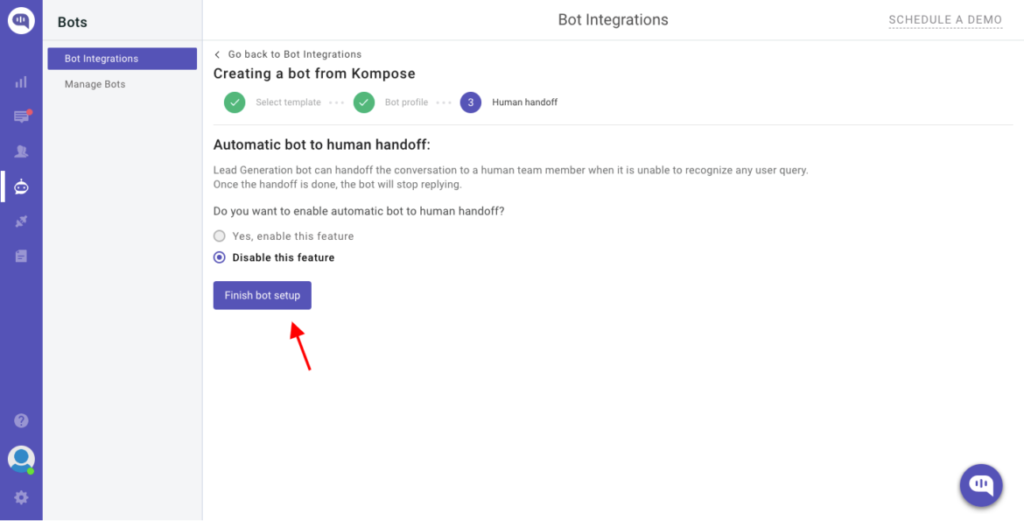

- Assign your bot a name and language in the Bot Profile section.

- You can also enable or disable automatic human handoff for seamless support.

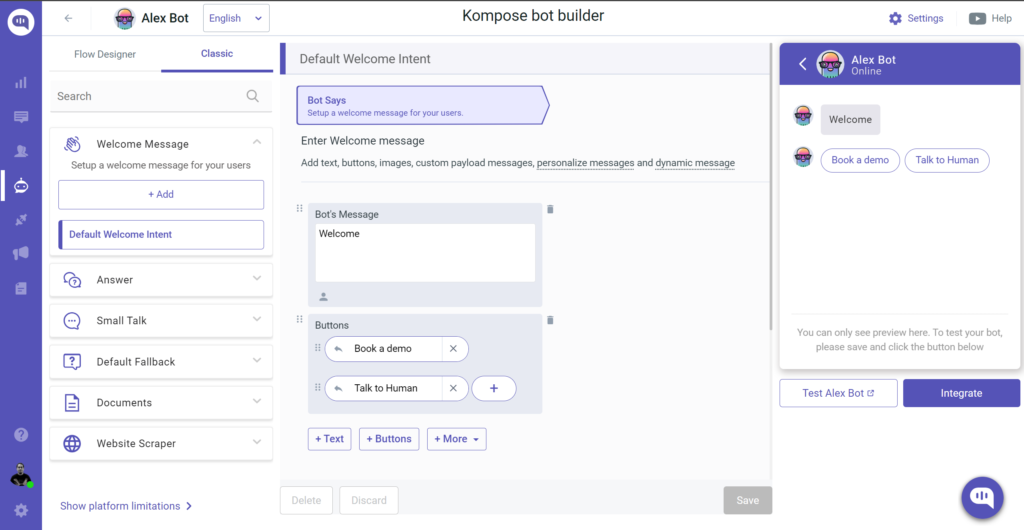

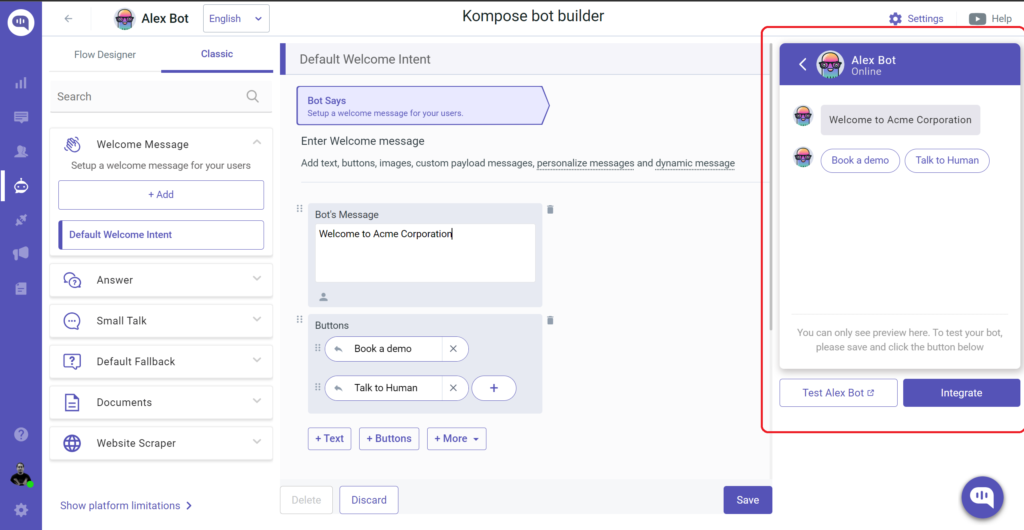

Step 3: Customizing Bot Responses

- After creating your first bot, you’ll be taken to the Kommunicate Flow Designer. We’ve already prepared a basic conversation tree there to help you get started.

- Click on the Bot response block to open its edit window. You can customize the conversation flow the way you want.

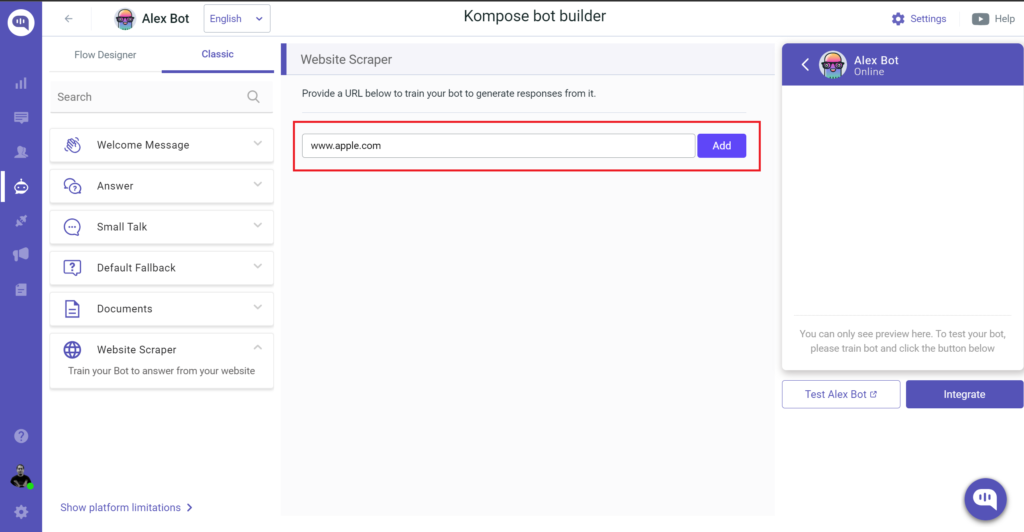

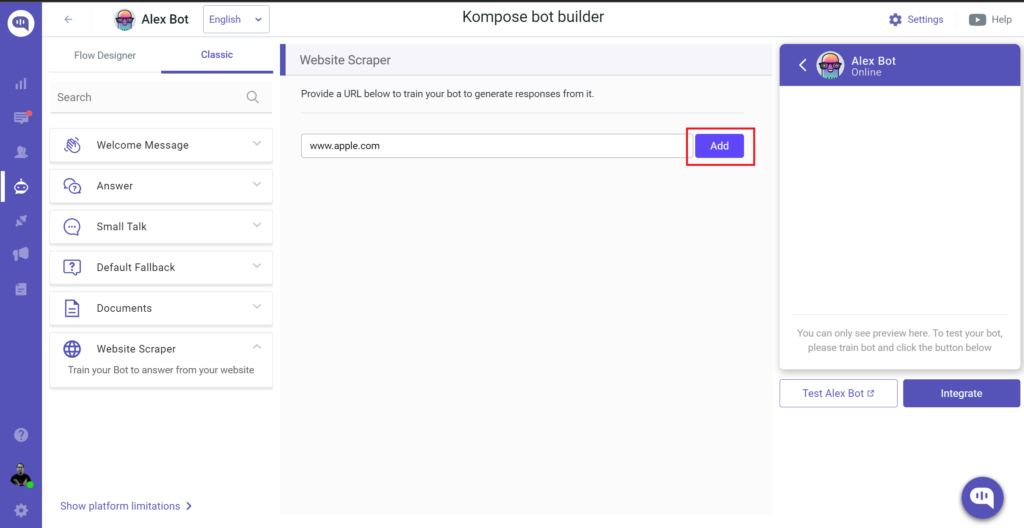

Step 4: Training the Bot with Website Scraping

- Now comes the fun part: converting your website into a chatbot. Click on Website Scraper and enter the URL of the website you want to train the chatbot on.

- Finally, click “Add”. The scraper processes your website’s content and makes it available to the chatbot, which can then respond with contextual, up-to-date answers based on your site’s latest content.

Step 5: Testing Your Chatbot

- The final step is to test your generative AI chatbot. Use the Test Chatbot feature to ensure it delivers accurate responses.

- Experiment by asking questions related to your site’s content to evaluate its effectiveness. Congratulations, you have just converted your entire website into a chatbot.

Real-World Applications and Advantages of Web-Scraping Chatbots

Here’s how web-trained chatbots benefit businesses:

1. Data Collection

Quickly extracts product information, pricing data, or customer feedback for user-friendly interactions.

2. Sentiment Analysis

Analyzes user sentiment from reviews and feedback, offering insights into common issues or positive features.

3. Quick Deployment

Enables fast setup and go-live, making web-trained chatbots a convenient solution for businesses.

4. Cost-Effectiveness

Lowers costs by eliminating manual data collection and simplifying the chatbot’s ongoing training process.

5. Adaptive Maintenance

Automatically updates the chatbot as website content changes, reducing the need for regular retraining.

Alternatives to Website Scraping for Chatbot Training

For readers interested in other chatbot training methods, here are some options:

- Manual Data Uploads: Manually upload CSV or JSON files to train the bot on specific data. This approach is time-intensive but may be more precise.

- API Integrations: Many businesses use API integrations to feed data into chatbots, ensuring a continuous flow of up-to-date information without scraping limitations.

- Knowledge Bases: Training chatbots on FAQ pages or help desk articles ensures responses based on reliable, curated content.
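As a rough illustration of the manual upload route, the sketch below converts a small FAQ export from CSV into JSON records and drops incomplete rows along the way. The `question` and `answer` column names and the sample rows are assumptions made for this example. The format a given chatbot platform accepts may differ, so check its documentation before uploading.

```python
import csv
import io
import json

# Hypothetical FAQ export; the column names are assumptions.
FAQ_CSV = """\
question,answer
What are your support hours?,We are available 9am to 6pm on weekdays.
Do you offer refunds?,"Yes, within 30 days of purchase."
Where are you located?,
"""


def csv_to_records(csv_text):
    """Parse FAQ rows and skip any with an empty question or answer."""
    records = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        question = (row.get("question") or "").strip()
        answer = (row.get("answer") or "").strip()
        if question and answer:
            records.append({"question": question, "answer": answer})
    return records


records = csv_to_records(FAQ_CSV)
faq_json = json.dumps(records, indent=2)
print(faq_json)
```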

Conclusion

Transforming your website into a chatbot using web scraping gives businesses a powerful tool to enhance customer support and streamline operations. Combined with AI-powered email ticketing and customer service email automation, a web-trained chatbot can deliver accurate, contextual responses in real time. Platforms like Kommunicate help keep that information up to date, provided you also address the legal and ethical considerations of scraping.

CEO & Co-Founder of Kommunicate, with 15+ years of experience in building exceptional AI and chat-based products. Believes the future is human + bot working together and complementing each other.
