Updated on February 12, 2025

Many businesses have adopted AI to scale different functions across their workflows. New models like OpenAI o1 are expected to drive this adoption further.

However, as with any other technology, errors in AI carry risk. Problems with AI implementation and strategy can disrupt your business.

We’ve been working with multiple enterprise clients globally to scale their operations with AI, and we’ve identified seven common AI mistakes. In this article, we cover these mistakes and how to solve them. You’ll learn about:

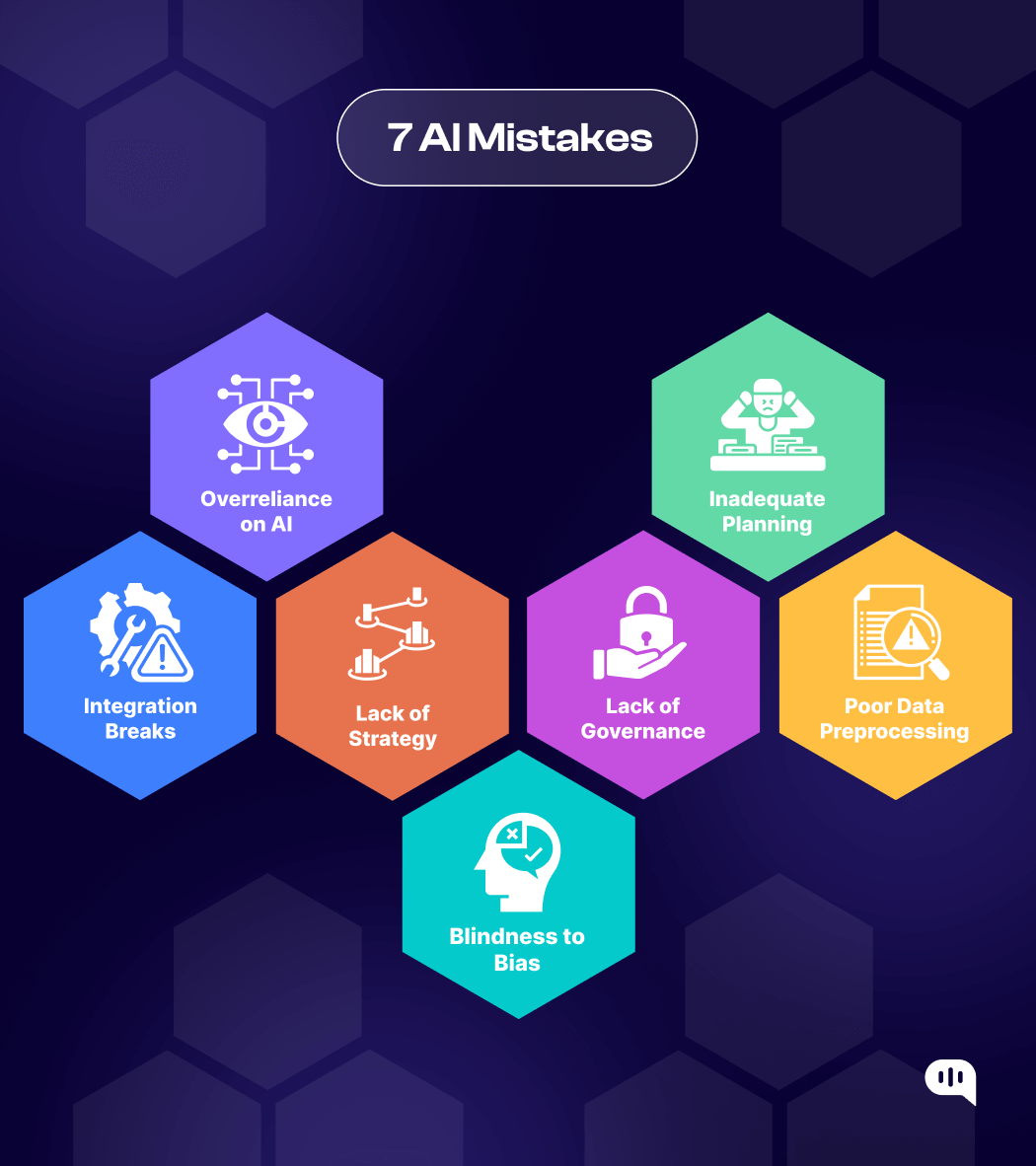

7 Common AI Mistakes

1. Overreliance on AI

Implementing AI with the expectation that it will be a silver bullet is not a great strategy. Given AI’s current technological limitations, relying on it without oversight can cause many problems.

Let’s take the example of AI in customer service. LLMs are perfectly capable of mimicking human patterns of text. However, they lack emotional intelligence.

So, instead of solving a customer’s problem, a chatbot might increase the person’s frustration. These AI errors can be exacerbated if your chatbot keeps sending the person in a loop while trying to answer a question.

AI can also falter in calculations and learn the wrong patterns during training. For example, a prediction algorithm that used AI to identify which patients needed more critical care began deprioritizing Black patients because it relied on healthcare spending as a proxy for healthcare needs.

These problems can be solved with some basic tweaks. Let’s explore them.

Solution: “AI + Human” Strategies

Several “AI+Human” strategies can be used to address the overreliance on AI:

Bot-to-Human Handover

If you’re adding AI to your customer service process, building systems that hand over complex and challenging questions to a human agent is reasonably easy. This prevents an AI loop and helps your chatbots perform better.
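A handover rule like the one described above can be sketched in a few lines of Python. This is a minimal illustration, not a production design: the `BotTurn` fields, the confidence score, the thresholds, and the trigger phrases are all assumptions that you would replace with whatever your chatbot platform actually exposes.

```python
from dataclasses import dataclass

# Hypothetical thresholds; tune them against your own conversation data.
MIN_CONFIDENCE = 0.6
MAX_FAILED_TURNS = 2
HANDOVER_PHRASES = ("human", "agent", "representative")


@dataclass
class BotTurn:
    user_message: str
    bot_confidence: float  # assumed score in [0, 1] reported by the bot
    failed_turns: int      # consecutive turns the bot could not answer


def should_hand_over(turn: BotTurn) -> bool:
    """Return True when the conversation should go to a human agent."""
    text = turn.user_message.lower()
    asked_for_human = any(phrase in text for phrase in HANDOVER_PHRASES)
    low_confidence = turn.bot_confidence < MIN_CONFIDENCE
    stuck_in_loop = turn.failed_turns >= MAX_FAILED_TURNS
    return asked_for_human or low_confidence or stuck_in_loop
```

Counting consecutive failed turns is what breaks the loop: once the bot has missed twice in a row, the customer reaches a person instead of receiving a third fallback message.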

Double-Checking for Bias

Any predictive algorithm must have essential checkpoints to see whether the AI system has learned the correct patterns.

Ideally, you want a human being who checks your AI models for mistakes and fixes them. You also need to build systems that acknowledge failure and hand off to humans.
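One simple checkpoint is to compare how often the model selects people from each group and flag large gaps for human review. The sketch below assumes you can export predictions as `(group, selected)` pairs; the function names and the 0.8 threshold (borrowed from the "four-fifths" rule of thumb) are illustrative assumptions, and a low ratio is a signal to investigate rather than proof of bias.

```python
from collections import defaultdict

# Hypothetical review threshold; the "four-fifths" rule of thumb uses 0.8.
REVIEW_THRESHOLD = 0.8


def selection_rates(records):
    """records: iterable of (group, selected) pairs, where selected is a bool."""
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, was_selected in records:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}


def disparity_ratio(rates):
    """Lowest selection rate divided by the highest (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected in any group
    return min(rates.values()) / highest


def needs_human_review(records):
    """Flag the model for review when group selection rates diverge."""
    return disparity_ratio(selection_rates(records)) < REVIEW_THRESHOLD
```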

2. Integration Breaks

Your LLMs aren’t pre-loaded with specific information about your business and industry. You will often use a retrieval-augmented generation (RAG) system to supply that information, and this involves several complex APIs that connect your data sources to your AI.

If these APIs fail, they may cause chatbot errors. You will get incomplete information if your AI systems aren’t seamlessly connected to your data sources or platforms.

Additionally, if there is an integration problem with your website or with marketing channels, it will affect your overall customer experience. Similarly, an integration break with a data source can lead to incorrect answers from your predictive analytics AI algorithms.

Solution: Test Every Connection

Every API connection is governed by its own rules, such as request formats and usage limits, and by the code that calls it. If you’re using AI, test each connection at full load and address the points of failure you find.

Also, remember that every platform you integrate with will have different rules for its API, and you shouldn’t violate them.
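One way to handle a point of failure is to retry a failed call with increasing delays and then fall back to a safe response instead of a wrong one. The following is a rough sketch under stated assumptions: `call_with_retry` is a hypothetical helper rather than part of any vendor SDK, and `call` stands for whatever function wraps your real API request.

```python
import time


def call_with_retry(call, retries=3, base_delay=0.5, sleep=time.sleep):
    """Call `call()`; on failure wait base_delay * 2**attempt and try again.

    Returns (result, None) on success, or (None, last_error) once every
    attempt has failed, so the caller can fall back to a safe answer.
    """
    last_error = None
    for attempt in range(retries):
        try:
            return call(), None
        except Exception as error:  # in real code, catch specific error types
            last_error = error
            if attempt < retries - 1:
                sleep(base_delay * 2 ** attempt)
    return None, last_error
```

If the second value is not `None`, the chatbot can tell the customer that the information is temporarily unavailable or hand over to a human agent. Spacing out the retries also helps you stay within the rate limits that many platforms place on their APIs.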

We recommend that you work with a vendor like Kommunicate that already has a pre-built suite of integrations so that you can minimize work on your end.

3. Lack of Strategy

Jumping headfirst into the AI wave might not be as fruitful in the long run. If the AI implementation is done quickly and without thinking about overall business goals, then there can be multiple points of failure.

In customer support, this can happen when you launch AI into a channel where you receive very few messages. Since AI is built for scale, a platform with few messages will not give you a high ROI.

Similarly, this problem can affect predictive models as well.

For example, Zillow used an algorithm to predict house prices and made offers based on those predictions. Ultimately, the algorithm’s error rates were as high as ~7% and caused millions of dollars in losses.

A strategic business proposal would’ve estimated this error rate and built humans into the system for proper appraisals.

Solution: Building an AI-First Strategy

The basic things you’ll need for an AI-first strategy are as follows:

- Understand customer needs and use them as a cue for implementation.

- Calculate error rates by doing small launches and collecting customer feedback.

- Decrease error rates by incorporating new and varied training data.

- Use checkpoints to ensure that AI doesn’t give out wrong information.

- Prioritize value to the customer while implementing AI.

These few points can ensure that your AI launch follows the proper strategy and is aligned with first principles.
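When you calculate an error rate from a small launch, remember that a small sample gives only a rough estimate. The sketch below uses the Wilson score interval, a standard statistical method, to put a range around the measured rate. The function name and the sample numbers are illustrative assumptions, and `z=1.96` corresponds to roughly 95% confidence.

```python
import math


def wilson_interval(errors, total, z=1.96):
    """Approximate 95% Wilson score interval for an error rate."""
    if total <= 0:
        raise ValueError("total must be positive")
    p = errors / total
    denom = 1 + z**2 / total
    centre = (p + z**2 / (2 * total)) / denom
    half = z * math.sqrt(p * (1 - p) / total + z**2 / (4 * total**2)) / denom
    return max(0.0, centre - half), min(1.0, centre + half)


# Hypothetical pilot: 7 wrong answers out of 100 reviewed conversations.
low, high = wilson_interval(7, 100)
print(f"Error rate is plausibly between {low:.1%} and {high:.1%}")
```

A wide interval suggests that the pilot needs more conversations before you decide whether the error rate is acceptable for a full launch.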

4. Blindness to Bias

Bias is an ongoing problem with AI systems. AI systems (including advanced LLMs) can give biased results unless trained on varied data.

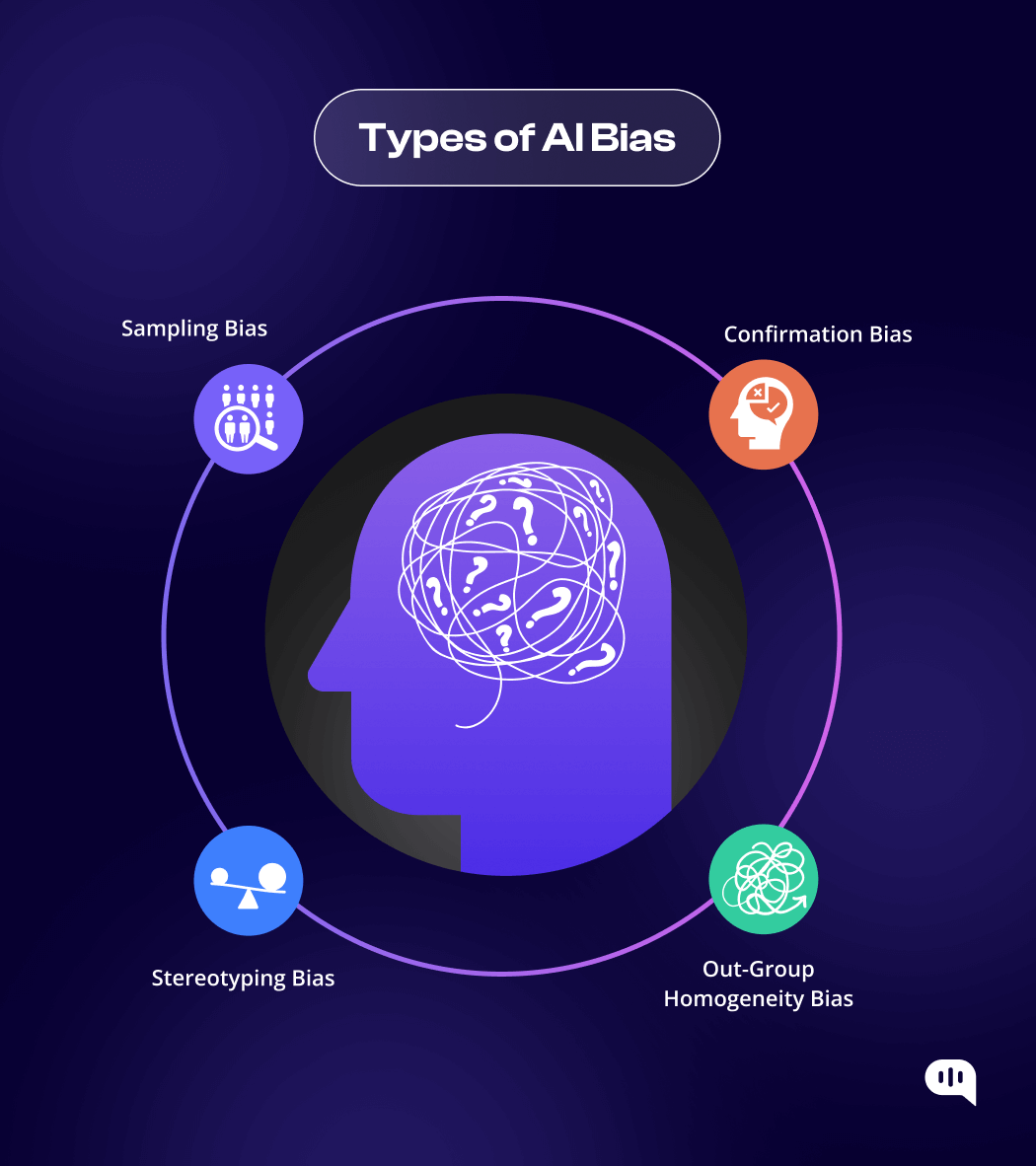

This is a complex issue that can be caused by:

- Sampling bias

- Confirmation bias

- Stereotyping bias

- Out-group homogeneity bias

The technological issues that lead to bias from AI systems are an area of active research. However, there are several things that businesses can do to prevent bias within their own AI systems.

Solution: Addressing Data Scarcity and “AI + Human” Workflows

One of the core causes of bias in AI systems is a lack of varied data. As a business adopting AI, if you can provide different data sources with a lot of variance, your AI model is likely to be less biased.

Additionally, it’s necessary to create checkpoints where humans check AI’s work and proactively address potential biases.

Proactive fine-tuning and varied data points can address a lot of bias in AI.

5. Lack of Governance

AI governance means understanding how your AI systems respond and protecting them against cyberattacks. Hackers can use prompt injections and other attacks to exploit your AI systems. To address these problems, businesses use the cybersecurity tactic of red-teaming, which exposes faults within the system before attackers find them and gives your business increased security.

Businesses also use several methodologies to protect personally identifiable information (PII) when they use AI systems. These methods are all part of good AI governance.

Solution: Choosing the Right Vendor

Most businesses can’t run full-suite red teaming operations on AI systems. It’s better to choose AI systems that provide security from the get-go. Some points to prioritize are as follows:

- Choose a vendor with SOC 2, HIPAA, and PCI DSS compliance.

- Prioritize vendors with an ISO certification.

- Choose AI-agnostic vendors that let you use models from OpenAI, Google, Anthropic (Claude), IBM, and other major players for your generative AI chatbots.

This will give you a list of vendors that prioritize cybersecurity as a core principle and let you run your operations smoothly.

6. Poor Data Pre-Processing

We’ve already discussed how improper data can lead to AI bias in your systems. One of the common AI mistakes occurs with data preprocessing. If your data is not appropriately structured, chatbot errors will be multiplied.

For example, your customer might get incorrect information about your pricing if your pricing data isn’t pre-processed into a format that AI can understand.

Solution: Data Preparation

Preparing your data and adding proper metatags and labels is essential to training your AI systems.

Most vendors provide these services, but you might want to enquire about:

- How they divide (or chunk) your data, and whether this causes a loss of context.

- Whether they have contextual understanding built into their chatbots.

- How they handle numerical and text-based data.

These fundamental questions will affect how data is processed in the AI system.
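To see why chunking can lose context, consider a basic approach that splits text into fixed-size pieces but repeats a few words between neighbouring chunks, so a sentence cut at a boundary still appears whole in one of them. This is a simplified sketch with assumed sizes. Real vendors may chunk by sentence, heading, or token count instead.

```python
def chunk_words(text, chunk_size=200, overlap=40):
    """Split text into word chunks; neighbours share `overlap` words."""
    if chunk_size <= 0 or not 0 <= overlap < chunk_size:
        raise ValueError("need chunk_size > 0 and 0 <= overlap < chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # the last chunk already reaches the end of the text
    return chunks
```

With no overlap, a pricing table split across two chunks could leave the plan name in one chunk and its price in the next, which is one way a chatbot ends up quoting the wrong figure.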

7. Inadequate Planning

Finally, if you launch an AI system to scale your business operations, you must plan your launch. This ensures that your customers are minimally affected by the technological changes and provides them a space to adjust.

It also allows your business to provide a better-aligned AI system for your customers.

Solution: An Implementation Framework

We recommend the following framework for the implementation of an AI suite:

1. Identify Customer Needs: Learn where AI systems are most needed and which areas will add maximum value to your customers.

2. Do Data Analysis: Use data from your customer service records and your CRMs to identify common questions your customers ask.

3. Execute a Pilot Program: Launch your AI chatbot to a small cohort of customers who can test and give you feedback.

4. Add Content and Re-Train: Once you understand the problems with your current AI systems, continue adding more data and re-training to maximize customer engagement.

This framework is simple, but it gives businesses a baseline for planning their AI launches.
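The data analysis step can start small. As a rough sketch, assuming you can export past customer questions as a list of strings, a few lines of Python will surface the most frequent ones. The `normalize` and `top_questions` helpers are hypothetical, and exact matching after normalization will miss paraphrases, so treat the output as a starting point.

```python
import re
from collections import Counter


def normalize(question):
    """Lowercase and strip punctuation so near-identical questions match."""
    return re.sub(r"[^a-z0-9 ]+", "", question.lower()).strip()


def top_questions(questions, n=5):
    """Return the n most common normalized questions with their counts."""
    counts = Counter(normalize(q) for q in questions if q.strip())
    return counts.most_common(n)
```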

Some Thoughts

Global businesses are widely adopting AI systems. While recent developments make AI distinctly capable of scaling a business in different areas (customer service, development, etc.), it also has problems.

AI still needs several technological improvements. Algorithmic biases, problems with integration, data processing capabilities, and AI governance are all active research areas. These common AI mistakes can be proactively addressed with good technological planning and by choosing the right vendor for your AI services.

Additionally, it’s essential to plan and create strategies before implementing AI. Keeping humans in the process and using basic frameworks to run your AI implementation both help.

In our extensive experience with enterprises, these strategies can be enough to address common AI mistakes. AI is already here, so we hope these strategies can benefit your business.

CEO & Co-Founder of Kommunicate, with 15+ years of experience in building exceptional AI and chat-based products. Believes the future is human + bot working together and complementing each other.