Updated on April 24, 2025

According to McKinsey, AI technologies could add up to $1 trillion in value to global banking each year. A significant part of this value is expected to come from changes to customer service functions.

We’ve written extensively about how customer expectations around engagement are changing. Since the COVID-19 pandemic, customers have increasingly adopted digital self-service channels while demanding more personalization in the services they receive.

AI fits this paradigm because it adds a personalized human touch to the conversations on digital self-service channels.

However, even as self-service channels become popular, voice has not disappeared. According to a recent survey, 70% of Gen Z customers pick up the phone for complex problems. In that context, the next frontier for customer support is evident: AI chatbots that work with voice and use that capability to automate customer service functions.

Intrigued by the capabilities of these voice AI chatbots? Here’s what we are covering in this article:

- What Can You Expect from AI Voice Chatbots?

- Benefits of Voice-Activated Chatbots

- Drawbacks of Voice-Activated Chatbots

- Future Trends and Research

- Conclusion

What Can You Expect from AI Voice Chatbots?

The launch of GPT-4o (GPT-4 Omni) marked remarkable progress for AI chatbots. It was a multimodal model that could converse through voice, images, and text.

OpenAI describes the model as handling audio natively rather than transcribing speech to text first, and reports that it can respond to audio input in as little as 232 milliseconds, with an average response time of 320 milliseconds.

One of the biggest challenges in implementing AI voice chatbots in customer service is latency. No one likes to sit through a conversation where the other person (or machine) goes silent for a long time. GPT-4o introduced an advanced voice model that could respond to questions at a pace that makes it easier for humans to communicate with it.

This provides a small primer on what we should expect from AI voice chatbots for customer service.

What are the Features of Voice AI Chatbots for Customer Service?

1. Fast Responses

Customers talk faster than they write and expect replies at a similar pace. These models need to bring their response times down to a fraction of a second.

2. Larger Context Lengths

In emails, customers tend to write out their problems in one large block of text. In voice calls, people usually lay out their issues over a multi-turn conversation. So, chatbots need to extract data from multiple conversation turns to get the proper context behind the problem.

3. Probing Questions

These chatbots need to be trained to sound like humans. This also means teaching them to ask follow-up questions so they can see the bigger picture behind a problem.

4. Emotional IQ

Research suggests that voice drives stronger social connections. To maintain this connection, voice chatbots must showcase higher emotional IQ.
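The second and third features above (context spread over several turns, and follow-up questions) can be illustrated with a small Python sketch. It is only an illustration under stated assumptions: the `CallContext` class, the field names in `REQUIRED`, and the idea that an upstream model has already extracted details from each turn are all hypothetical and not taken from any specific product.

```python
from dataclasses import dataclass, field


@dataclass
class CallContext:
    """Accumulates what the caller has said across the turns of one call."""

    turns: list[str] = field(default_factory=list)
    facts: dict[str, str] = field(default_factory=dict)

    def add_turn(self, transcript: str, extracted: dict[str, str]) -> None:
        # Keep the raw transcript and merge any newly extracted details.
        # Later turns overwrite earlier ones, so a caller's correction wins.
        self.turns.append(transcript)
        self.facts.update(extracted)

    def missing(self, required: list[str]) -> list[str]:
        # Details still unknown: candidates for a follow-up question.
        return [name for name in required if name not in self.facts]


# Hypothetical details a support bot might need before it can act.
REQUIRED = ["order_id", "issue", "preferred_fix"]

ctx = CallContext()
# The `extracted` dicts stand in for the output of an assumed upstream model.
ctx.add_turn("Hi, my order never arrived.", {"issue": "not delivered"})
ctx.add_turn("The order number is 1042.", {"order_id": "1042"})

print(ctx.missing(REQUIRED))  # the bot should ask about these next
```

The design choice here is to keep both the raw turns and a merged set of facts, so the bot can decide what to ask next without re-reading the whole call.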

Now that we understand the features that will drive the mass adoption of voice AI chatbots, we should also look at how this works.

How do Voice AI Chatbots Work?

Most voice chatbots follow a simple process to answer questions:

- Customer Asks a Question – The customer asks the chatbot a question aloud.

- The Voice Is Converted to Text – The chatbot uses a speech-to-text algorithm to convert your speech to text.

- Noise is Filtered – Unnecessary noises (background noises like the sound of the fan or AC) are deleted.

- Text is Processed – The resulting text is converted into a vector embedding, a numeric representation that can be compared with other text.

- Context is Added – AI checks the latest prompt against a database of previous responses for contextual understanding.

- An Answer is Found – The chatbot searches its memory for an accurate answer.

- Chatbot Gives the Answer – The chatbot generates text that is converted into voice and communicated to the customer.

As you can see, chatbots must follow a complicated process to provide contextual answers to customers. However, this kind of voice conversation is possible with the new generation of LLMs like GPT-4o and Gemini 1.5 Pro.
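The steps listed above can be sketched end to end in Python. This is a toy under loud assumptions, not how any particular product works: every function below is a hypothetical stand-in (the "audio" is just UTF-8 text, the embedding is a bag of words, and the knowledge base is two hard-coded entries). A real system would use speech recognition, a learned embedding model, an LLM, and speech synthesis in their place.

```python
import math
import re
from collections import Counter

# Hypothetical knowledge base: known question -> stored answer.
KNOWLEDGE_BASE = {
    "how do i reset my password": "Open Settings and choose Reset Password.",
    "where is my order": "You can track your order from the Orders page.",
}
MIN_SCORE = 0.3  # assumed threshold below which earlier turns are consulted


def speech_to_text(audio: bytes) -> str:
    # Stand-in for a speech recognition model.
    return audio.decode("utf-8")


def filter_noise(text: str) -> str:
    # Drop bracketed non-speech markers such as "[fan noise]".
    text = re.sub(r"\[[^\]]*\]", " ", text)
    return re.sub(r"\s+", " ", text).strip().lower()


def embed(text: str) -> Counter:
    # Toy bag-of-words vector standing in for a learned embedding.
    return Counter(re.findall(r"[a-z0-9']+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[token] * b[token] for token in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def best_match(query_text: str) -> tuple[str, float]:
    # Return the closest known question and its similarity score.
    query = embed(query_text)
    question = max(KNOWLEDGE_BASE, key=lambda q: cosine(query, embed(q)))
    return question, cosine(query, embed(question))


def find_answer(text: str, history: list[str]) -> str:
    question, score = best_match(text)
    if score < MIN_SCORE and history:
        # The latest turn alone is unclear, so add recent turns as context.
        question, score = best_match(" ".join(history[-2:] + [text]))
    return KNOWLEDGE_BASE[question]


def text_to_speech(text: str) -> bytes:
    # Stand-in for a speech synthesis model.
    return text.encode("utf-8")


def handle_turn(audio: bytes, history: list[str]) -> bytes:
    text = filter_noise(speech_to_text(audio))
    reply = find_answer(text, history)
    history.append(text)
    return text_to_speech(reply)


history: list[str] = []
print(handle_turn(b"[fan noise] Where is my order", history))
print(handle_turn(b"It has been a week [AC hum]", history))
```

The second call shares no words with either stored question, so the sketch falls back to the earlier turn for context before choosing an answer.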

What happens when the adoption of these voice AI chatbots becomes widespread? Let’s understand the business context.

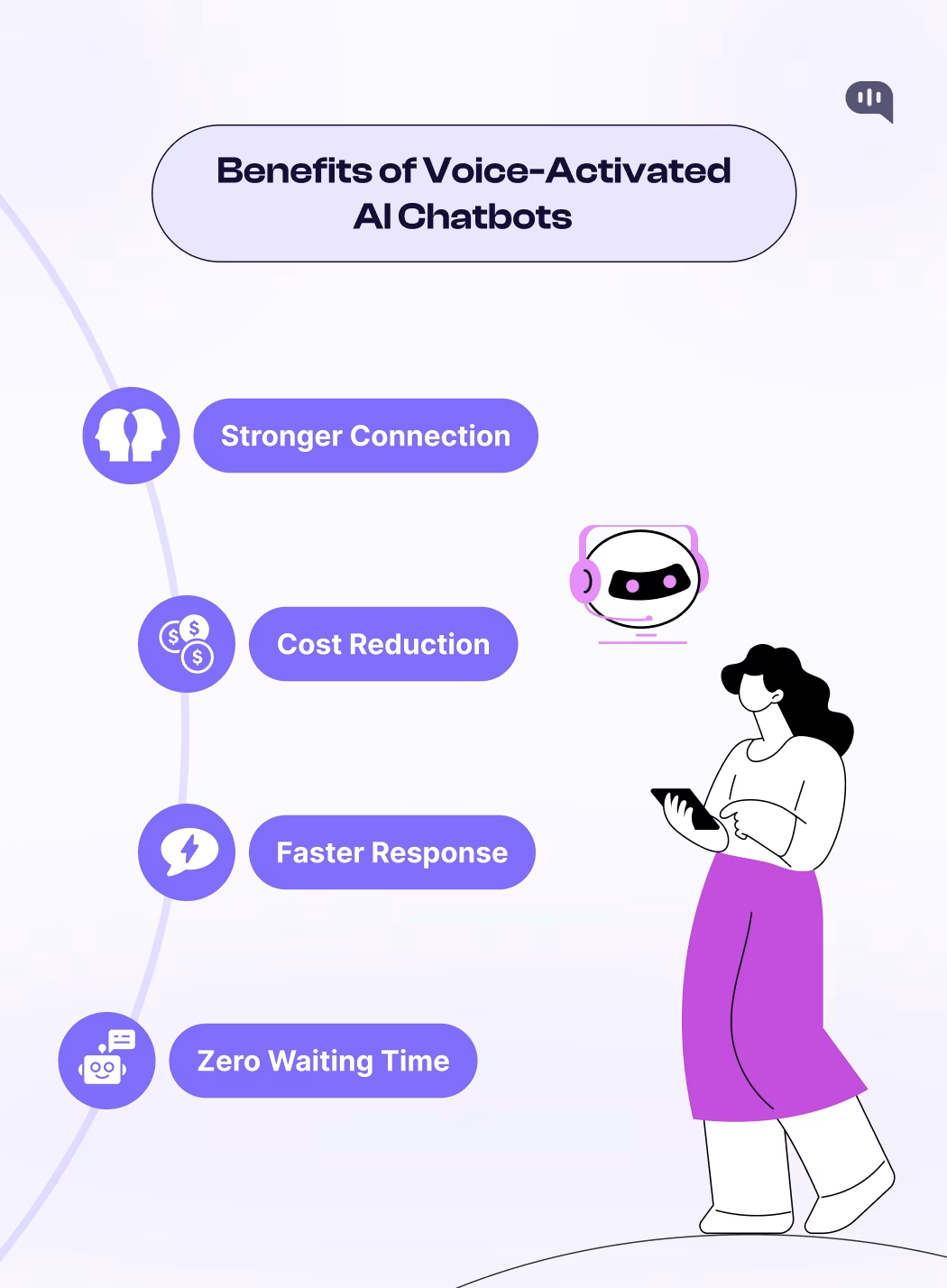

What are the Benefits of Voice-Activated AI Chatbots?

Beyond the benefits of AI chatbots in general, voice-activated chatbots offer additional benefits to a business. These benefits are:

1. Stronger Connections

As we pointed out earlier, people create more robust connections over calls. Until now, human agents fostered these connections manually. AI chatbots can automate the process and build such connections at scale.

2. Lower Call Center Costs

BPO and customer care costs at call centers are massive. On average, outsourcing calls to a service center costs $6-$10 per hour. AI can reduce this figure substantially by handling the same calls at a fraction of the cost.

3. Faster Responses

AI finds information faster and may offer speedier resolution times than human agents, especially for call centers that act primarily as an interface for raising support tickets or quick inquiries into services.

4. Zero Hold Times

Because voice-based AI services scale on demand, customers will face almost zero wait times.

Together, these benefits make a strong case for businesses. However, several things could be improved in the current implementation of voice-based chatbots.

What are the Drawbacks of Voice-Activated AI Chatbots?

The release of newer LLMs is driving the mass adoption of generative AI chatbots. However, LLMs, and especially their voice components, are not infallible. In that context, let’s address some drawbacks that are holding this technology back from mass adoption:

1. Hallucinations

While RAG-based systems have considerably reduced hallucinations in AI chatbots, they are still an area of concern. Additionally, voice is a stronger medium for communication, so even occasional hallucinations can be a challenging problem for voice-based AI chatbots.

2. Latency

While 232 milliseconds is a good benchmark for quick responses, human conversation does not run at one fixed speed. A person might reply instantly to some questions and take longer over more complex ones. These chatbots would need to be trained to follow this natural pace.

3. Context Length

Telephone conversations can go on longer than textual ones. This requires an increased context length and memory. This is evolving, and the current state-of-the-art models can handle longer context lengths, too.

4. Security and Downtime Risks

Like all tech systems, voice-activated AI chatbots will have some security and downtime risks. It is better to prioritize providers that hold up-to-date security certifications and offer uptime guarantees as part of their SLAs.
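For the latency drawback above, one simple way to reason about response time is to add up the time each stage takes and compare the total against a target. The Python sketch below assumes a 320 millisecond budget, borrowed from the average response time quoted earlier in this article. The stage names and the measurements are made up for illustration and do not come from any real system.

```python
def check_latency(stage_ms: dict[str, float], budget_ms: float = 320.0) -> dict:
    """Compare the summed stage timings of one voice turn against a budget."""
    total = sum(stage_ms.values())
    return {
        "total_ms": total,
        "within_budget": total <= budget_ms,
        "over_by_ms": max(0.0, total - budget_ms),
        # The slowest stage is the first place to look for savings.
        "slowest_stage": max(stage_ms, key=stage_ms.get),
    }


# Hypothetical timings for a single turn, in milliseconds.
measured = {
    "speech_to_text": 90,
    "retrieval": 40,
    "generation": 180,
    "text_to_speech": 60,
}

report = check_latency(measured)
print(report)
```

With these illustrative numbers the turn takes 370 milliseconds, so it misses the assumed budget by 50 milliseconds, and generation is the stage to optimize first.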

Remember, voice-based AI chatbots are far newer than LLMs. These drawbacks might become much less prevalent as the technology matures and more enterprises adopt it. In fact, given the possible benefits, even the current models might be viable for mass adoption soon with some targeted fine-tuning.

However, several research projects are already building the next age of this voice-based tech. Let’s take a look at them.

What are the Future Trends Emerging in Voice AI Chatbot Development?

Several trends are currently dominating the field of research into voice-based AI chatbots. These are:

- Emotional Intelligence – Pi and similar chatbots provide highly emotionally intelligent responses to customers. This active research area can help customers build a stronger relationship with these AI chatbots.

- Multilingualism – One of the primary reasons for the success of BPO centers was that they could cater to a global audience through a centralized platform. AI also offers the same services at a marginal cost, but multilingual voice capabilities need further development.

- Emotional Understanding – As semantic understanding strengthens in LLMs, emotional understanding will also be necessary. This is the practice where a chatbot can understand a user’s emotional state and tailor its responses.

There are multiple other trends in the AI space that will affect voice-based chatbots. Multimodal chatbots and models that can do small actions independently will also become prevalent with time.

However, the three points mentioned above will be the primary improvements driving mass adoption of these systems.

Some Thoughts

Considering the rapid adoption of AI across the customer service domain, it is natural to assume that voice-activated AI will also be adopted soon. While the current generation of models faces some challenges to mass adoption (namely latency, lack of emotional depth, and hallucinated answers), they still provide multiple benefits that can be great for enterprises.

From our experience with live chatbots, we predict that these voice chatbots will reduce resolution and waiting times as soon as they are implemented. Further along, they will drive cost reductions and stronger customer connections.

With some cautious optimism, we can expect voice AI chatbots to be widely adopted soon.

CEO & Co-Founder of Kommunicate, with 15+ years of experience in building exceptional AI and chat-based products. Believes the future is human + bot working together and complementing each other.